- Home

- AI Text Generator

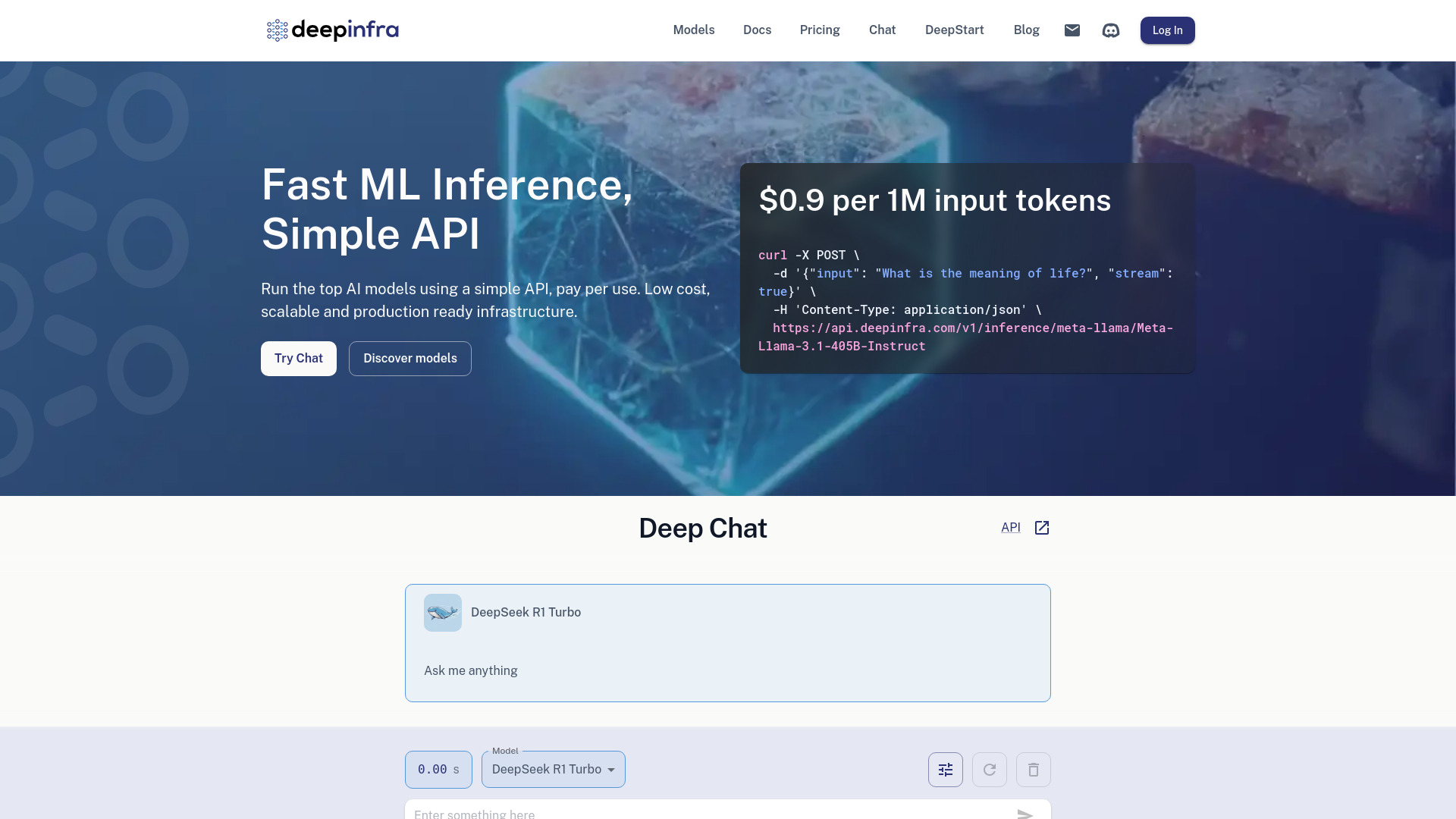

- Deep Infra

Deep Infra

Tool Introduction: Run top AI models via a simple API: pay-per-use, low latency, custom LLMs.

Inclusion Date: Oct 21, 2025

Tool Information

What is Deep Infra AI

Deep Infra AI is a production-ready platform for running state-of-the-art machine learning models through a simple, unified API. It delivers cost-effective, scalable, and low-latency inference so teams can add text generation, text-to-speech, text-to-image, and automatic speech recognition to products without managing GPUs or complex infrastructure. With pay-per-use pricing and the option to deploy custom LLMs on dedicated GPUs, Deep Infra AI streamlines the path from prototype to production while balancing performance, reliability, and cost.

Deep Infra AI Key Features

- Unified inference API: Access top AI models via a single, consistent endpoint for faster integration and maintenance.

- Low-latency serving: Optimized GPU inference for responsive user experiences in chat, voice, and creative apps.

- Pay-per-use pricing: Usage-based costs help control spend without upfront infrastructure commitments.

- Dedicated GPUs for custom LLMs: Deploy your fine-tuned or proprietary models on isolated GPU instances for performance and control.

- Multi-modal model catalog: Run text generation, text-to-speech, text-to-image, and ASR from one platform.

- Scalable infrastructure: Elastic capacity to handle spikes in traffic and production workloads.

- Simple deployment: Minimal setup with straightforward authentication, parameters, and streaming options.

- Monitoring and usage visibility: Track latency and consumption to optimize cost and quality.

- Flexible integration: Works with web, mobile, and backend services, CI/CD pipelines, and microservices.

Who Should Use Deep Infra AI

Deep Infra AI suits product teams, ML engineers, and startups that need fast, reliable AI inference without building their own GPU stack. It is ideal for chat and agent features, voice assistants, transcription services, creative and marketing tools, and applications that combine text, image, and speech. Enterprises looking to host custom LLMs with predictable performance and isolation on dedicated GPUs will also benefit.

How to Use Deep Infra AI

- Sign up and obtain an API key from your account dashboard.

- Choose a model (e.g., LLM for text, TTS, ASR, or text-to-image) from the catalog.

- Integrate the inference API in your app and set core parameters (prompt, temperature, max tokens, voice/style, etc.).

- Optionally enable streaming for real-time responses in chat or voice use cases.

- If needed, deploy a custom LLM on dedicated GPUs and point your application to the new endpoint.

- Test latency and quality, then refine prompts or settings for your target UX.

- Monitor usage and performance, set limits or batching strategies, and move to production.
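The steps above can be sketched in Python. This is a minimal sketch, not official client code. It assumes an OpenAI-compatible chat completions endpoint at `https://api.deepinfra.com/v1/openai/chat/completions` and bearer-token authentication; the function names and defaults are hypothetical. Confirm the actual URL, model IDs, and supported parameters in the official documentation before relying on it.

```python
import json
import urllib.request

# Assumption: an OpenAI-compatible chat endpoint; confirm the URL in the official docs.
API_URL = "https://api.deepinfra.com/v1/openai/chat/completions"


def build_chat_request(prompt, model, temperature=0.7, max_tokens=256, stream=False):
    """Build the JSON body for a chat completion call (pure function, no network)."""
    if not prompt:
        raise ValueError("prompt must not be empty")
    return {
        "model": model,  # model ID chosen from the catalog
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
        "stream": stream,  # set True to request a streamed response
    }


def send_chat_request(payload, api_key, url=API_URL, timeout=30):
    """POST the payload with a bearer token and return the decoded JSON response.

    Only handles non-streaming responses; a streamed response would need
    incremental parsing instead of a single json.loads call.
    """
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

To target a custom LLM on dedicated GPUs, the same helper could be pointed at the new endpoint by passing a different `url`. Keeping request construction separate from sending makes it easy to test parameters without spending on inference.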

Deep Infra AI Industry Use Cases

Customer support teams can power chat assistants with text generation while controlling costs at scale. Media and marketing platforms can generate on-brand visuals via text-to-image and produce voice-overs with text-to-speech. Voice and communications apps use automatic speech recognition for transcription, call analytics, and real-time captions. Product teams deploy custom LLMs on dedicated GPUs to ship proprietary features with consistent performance.

Deep Infra AI Pricing

Deep Infra AI uses a pay-per-use pricing model for API inference, letting teams pay only for what they run. For bespoke workloads, you can deploy custom LLMs on dedicated GPUs, with costs tied to allocated compute and usage. Refer to the official pricing page for current rates and details.
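To compare the two pricing modes, a rough estimate helps. The sketch below assumes per-token billing for text models and hourly billing for dedicated GPUs; both billing units, the function names, and every rate used with them are hypothetical placeholders, not published prices. Other modalities may be billed on different units.

```python
def estimate_monthly_cost(requests_per_day, input_tokens, output_tokens,
                          input_price_per_million, output_price_per_million,
                          days=30):
    """Estimate monthly pay-per-use spend from average token counts per request."""
    per_request = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    ) / 1_000_000
    return per_request * requests_per_day * days


def dedicated_monthly_cost(hourly_rate, gpus=1, hours_per_day=24, days=30):
    """Estimate monthly spend for always-on dedicated GPUs billed per hour."""
    return hourly_rate * gpus * hours_per_day * days
```

With placeholder rates of 0.10 and 0.40 per million input and output tokens, 10,000 requests a day averaging 500 input and 200 output tokens come to roughly 39 per month, while one dedicated GPU at a placeholder rate of 2.00 per hour comes to 1,440. Under these made-up numbers, pay-per-use is far cheaper at that volume; the balance shifts only when traffic is steady and high.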

Deep Infra AI Pros and Cons

Pros:

- Simple, unified AI inference API across multiple modalities.

- Low-latency inference suitable for real-time applications.

- Pay-per-use controls spend and removes upfront infrastructure costs.

- Option to run custom LLMs on dedicated GPUs for isolation and performance.

- Scalable and production-ready for bursty and steady workloads.

Cons:

- Dependency on a third-party service for uptime and model availability.

- Network conditions can affect end-to-end latency for global users.

- Costs may rise with heavy traffic or very large models if not optimized.

- Prompt and parameter tuning are needed to achieve consistent quality.

Deep Infra AI FAQs

- What types of models does Deep Infra AI support?

It supports text generation, text-to-speech, text-to-image, and automatic speech recognition through a unified API.

- Can I deploy my own custom LLM?

Yes. You can host custom or fine-tuned LLMs on dedicated GPUs and access them via secure endpoints.

- How is pricing structured?

Pricing is pay-per-use for API inference, with dedicated GPU deployments priced based on allocated compute and usage.

- Does it support streaming responses?

Streaming is commonly available for real-time experiences like chat and voice, reducing perceived latency.

- How do I optimize latency and cost?

Use streaming where applicable, tune parameters, cache results, and choose dedicated GPUs for steady, high-throughput workloads.

- What integration options are available?

You can call the API from web, mobile, or backend services, and automate deployments via your CI/CD pipeline.
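The "cache results" suggestion in the FAQ on latency and cost can be sketched as a small in-memory cache placed in front of whatever function calls the model. This is an illustrative sketch, not a platform feature: `ResponseCache` and the `call_model` callable are hypothetical names, and a production setup would likely need expiry and a shared store.

```python
import hashlib
import json


class ResponseCache:
    """In-memory cache keyed by a hash of the model, prompt, and parameters."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def make_key(model, prompt, **params):
        # sort_keys makes the key independent of keyword argument order.
        raw = json.dumps(
            {"model": model, "prompt": prompt, "params": params},
            sort_keys=True,
        )
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def get_or_call(self, call_model, model, prompt, **params):
        """Return a cached result, or invoke call_model once and store its result."""
        key = self.make_key(model, prompt, **params)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = call_model(model, prompt, **params)
        self._store[key] = result
        return result
```

Caching like this fits repeated, identical requests where a stored answer is acceptable, such as low-temperature lookups. It is a poor fit for requests that are expected to produce a fresh, varied output each time.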