Modal

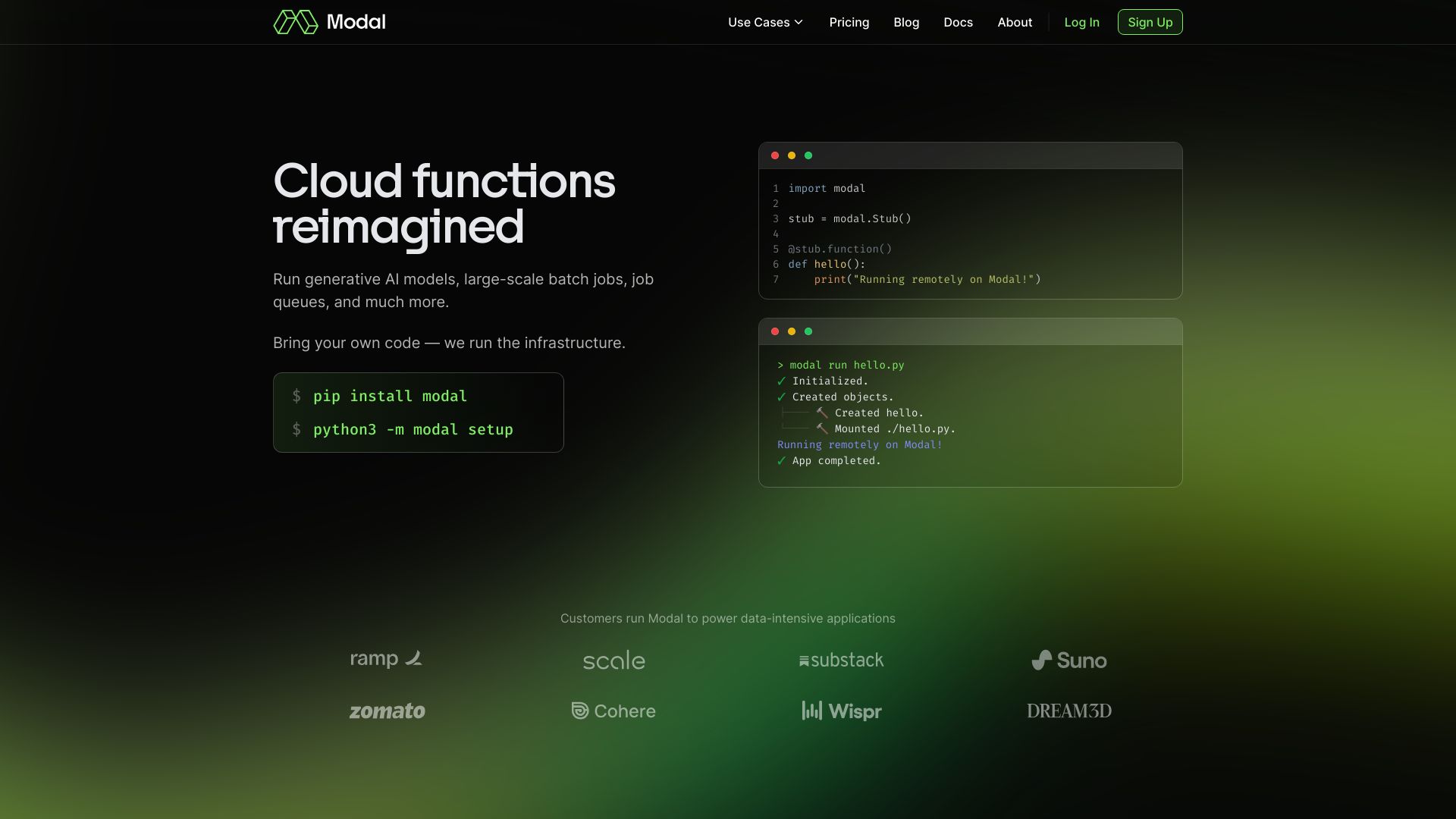

Tool Introduction: Serverless AI infrastructure for your code; GPU/CPU compute at scale with instant autoscaling

Inclusion Date: Oct 21, 2025

Tool Information

What is Modal AI

Modal AI is a serverless platform for AI and data teams that delivers high-performance infrastructure without managing clusters. Bring your own code and run CPU, GPU, and data-intensive compute at scale with instant autoscaling. With sub-second container starts, ephemeral environments, and zero config files, it streamlines ML inference, batch data jobs, and experimentation. By abstracting provisioning while preserving control over images, dependencies, and performance, Modal helps teams ship reliable AI systems faster and more cost-effectively.

Modal AI Main Features

- Serverless CPU/GPU compute: Run inference and data pipelines on demand, scaling up automatically under load and back down to zero when idle.

- Sub-second container starts: Fast cold-start times reduce latency for real-time ML APIs and interactive workloads.

- Code-first, zero config files: Deploy from code without YAML; bring your own containers and dependencies.

- Inference endpoints: Expose models as HTTP endpoints or webhooks for low-latency serving.

- Batch jobs and workflows: Execute ETL, feature generation, and distributed map-style tasks at scale.

- Scheduling and triggers: Run recurring jobs or event-driven pipelines without managing cron or queues.

- Observability: Stream logs, track runs, and monitor performance and concurrency to troubleshoot quickly.

- Secrets and configuration: Manage environment variables and credentials securely across environments.

- Cost controls: Set concurrency limits and autoscaling policies to keep CPU/GPU spend in check.

Who Is Modal AI For

Modal AI suits ML engineers serving models, data engineers running pipelines, full-stack and backend teams adding AI features, startups needing elastic GPU capacity, and research groups moving from notebooks to production without maintaining Kubernetes or bespoke infra.

How to Use Modal AI

- Sign up and install the CLI/SDK, then authenticate your workspace.

- Prepare your application code and dependencies; define a container image or base runtime.

- Declare functions, jobs, or endpoints and set resources (CPU, GPU type, memory, timeouts).

- Deploy to create a serverless endpoint for inference or a job for batch/data processing.

- Configure autoscaling, concurrency, and triggers (HTTP, schedules, events).

- Run workloads, stream logs, and inspect metrics to validate performance.

- Iterate on images and code; set secrets and environment variables for secure access to data.

Modal AI Industry Use Cases

E-commerce teams serve real-time recommendation and ranking models as low-latency endpoints. Media companies run large-scale batch embedding generation for semantic search and content moderation. Fintech groups orchestrate nightly ETL and feature pipelines with scheduled jobs. Computer vision teams deploy GPU-backed inference for image/video processing and analytics. Product teams expose LLM-based APIs for chat, summarization, and retrieval-augmented generation.

Modal AI Pricing

Modal AI uses usage-based billing: you pay for the compute and resources you consume (e.g., CPU/GPU time, memory, storage, and network), so costs scale with demand and no long-term commitment is required. For current rates, plan tiers, and any free trial availability, refer to the official pricing page.
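
To make the usage-based model concrete, the sketch below estimates a bill from resource-seconds. The rates are made-up placeholders, not Modal's prices, and the names `Rates` and `estimate_cost` are hypothetical; substitute the figures from the official pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Per-second prices in USD. Placeholder values, NOT real Modal rates."""
    gpu_per_s: float = 0.0005
    cpu_core_per_s: float = 0.00002
    mem_gib_per_s: float = 0.000003


def estimate_cost(seconds: float, gpus: int, cpu_cores: float, mem_gib: float,
                  rates: Rates = Rates()) -> float:
    """Cost = run time multiplied by the sum of each resource's per-second price."""
    per_second = (gpus * rates.gpu_per_s
                  + cpu_cores * rates.cpu_core_per_s
                  + mem_gib * rates.mem_gib_per_s)
    return seconds * per_second


# One hour of work on 1 GPU, 4 CPU cores, and 16 GiB of memory.
hourly = estimate_cost(3600, gpus=1, cpu_cores=4, mem_gib=16)
print(f"${hourly:.2f}")
```

Because the cost is proportional to run time, a workload that scales to zero when idle contributes nothing to the total for the idle period under this model.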

Modal AI Pros and Cons

Pros:

- Sub-second container startup and scale-to-zero for responsive, cost-efficient workloads.

- Autoscaling for both inference and data jobs.

- Code-first deployment with no YAML and full control over images and dependencies.

- Seamless access to serverless GPUs for deep learning use cases.

- Strong observability and straightforward debugging through logs and run metadata.

- Reduces operational overhead versus managing Kubernetes or dedicated clusters.

Cons:

- Cold starts can still be noticeable with large images or heavy initialization paths.

- Less low-level control than self-managed infrastructure.

- Potential vendor lock-in around APIs and deployment model.

- GPU availability and quotas can vary by region and demand.

- Data egress and dependency size can impact costs and startup times.

Modal AI FAQs

Question 1: Can I use my own Docker image?

Yes. You can bring your own image or build from a base image to control system libraries, CUDA versions, and Python dependencies.

Question 2: Does it support real-time inference?

Yes. Modal provides HTTP endpoints with autoscaling and sub-second container starts, which suits low-latency model serving.

Question 3: How do I schedule recurring data jobs?

You can configure schedules or event triggers to run ETL, feature generation, and maintenance tasks on a cadence.

Question 4: What GPUs are available?

Modal offers serverless GPU options for deep learning workloads. Available types and quotas depend on current capacity and region.

Question 5: How is cost controlled?

Use autoscaling policies, concurrency limits, and scale-to-zero. Monitor logs and metrics to optimize image sizes and run times.
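
The interaction between concurrency limits and autoscaling can be reasoned about with simple capacity arithmetic. The sketch below is a generic back-of-the-envelope model based on Little's law, not Modal's actual autoscaling algorithm; `containers_needed` and its parameters are hypothetical names.

```python
import math


def containers_needed(requests_per_s: float, latency_s: float,
                      inputs_per_container: int, max_containers: int) -> int:
    """Estimate containers required to serve a steady load, capped by a limit.

    In-flight requests ~= arrival rate * latency (Little's law). Dividing by
    the per-container concurrency gives the container count. Zero load yields
    zero containers, which models scale-to-zero.
    """
    in_flight = requests_per_s * latency_s
    wanted = math.ceil(in_flight / inputs_per_container)
    return min(wanted, max_containers)


print(containers_needed(50, 0.5, inputs_per_container=4, max_containers=10))   # 7
print(containers_needed(500, 0.5, inputs_per_container=4, max_containers=10))  # 10 (capped)
print(containers_needed(0, 0.5, inputs_per_container=4, max_containers=10))    # 0
```

In this model the cap bounds worst-case spend, at the price of queued or rejected requests once demand exceeds what the capped fleet can absorb.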