LiteLLM

Open Website-

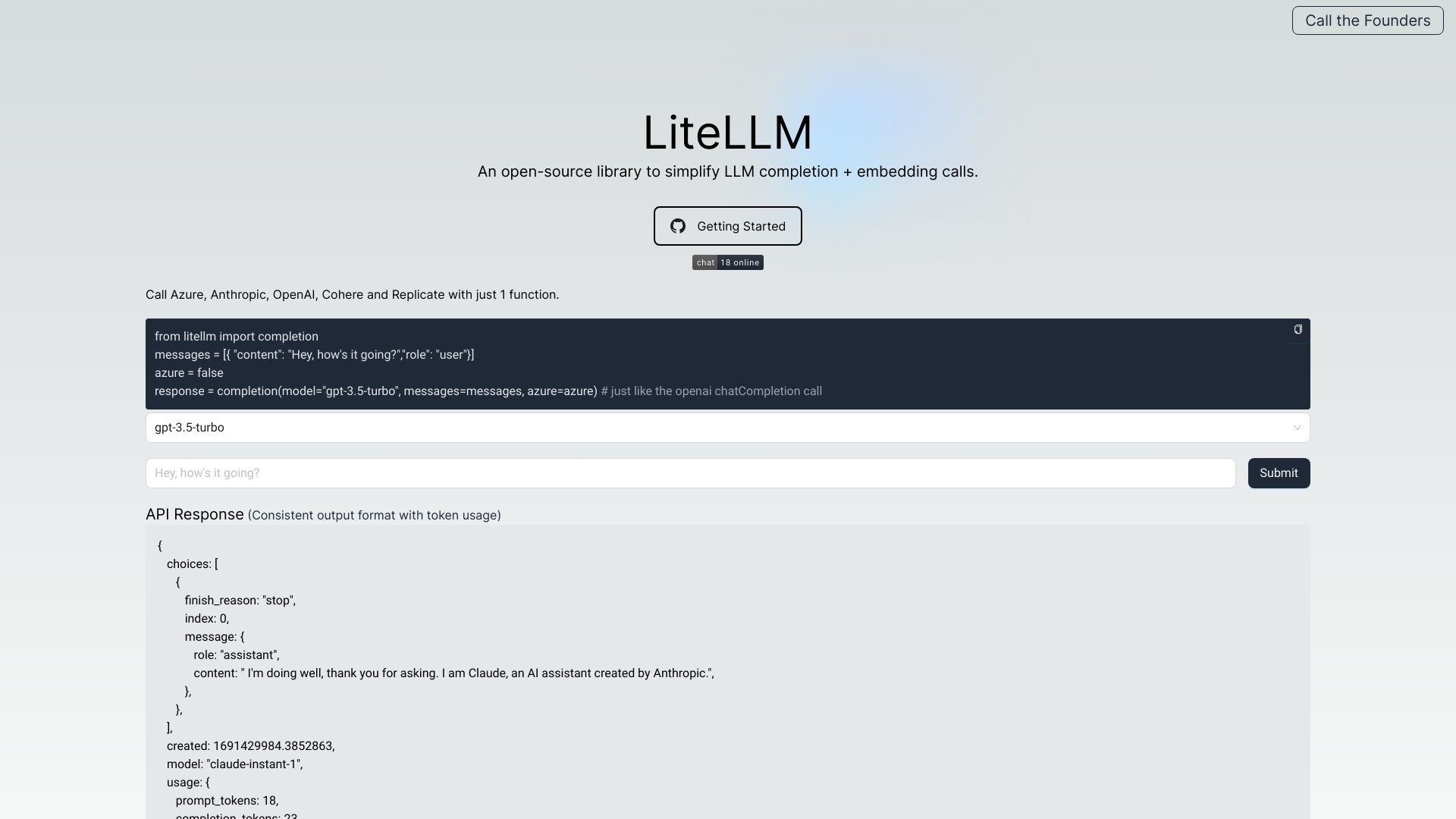

Tool Introduction:OpenAI proxy LLM gateway for 100+ models—auth, load balance, cost tracking.

-

Inclusion Date:Oct 21, 2025

-

Social Media & Email:

Tool Information

What is LiteLLM AI

LiteLLM AI is an LLM gateway and OpenAI-compatible proxy that unifies access to 100+ large language models from providers like OpenAI, Azure, Anthropic, Cohere, Google, and Replicate. It centralizes authentication, routing, and load balancing while preserving the familiar OpenAI request/response schema. Teams gain consistent outputs and exceptions across vendors, plus unified logging, error tracking, rate limiting, and model access controls. Key capabilities include cost and spend tracking, budgets, guardrails, prompt management, pass-through endpoints, S3 logging, a batches API, and deep LLM observability.

LiteLLM AI Main Features

- OpenAI-format gateway: Call diverse LLMs through a single, OpenAI-compatible API for minimal code changes.

- Multi-provider routing: Balance traffic across OpenAI, Azure, Cohere, Anthropic, Google, Replicate, and more.

- Spend and cost tracking: Monitor usage by model, team, and key; set budgets to prevent overruns.

- Rate limiting and quotas: Enforce per-key, per-model, or per-tenant limits to manage capacity and control costs.

- Guardrails: Add rules to constrain prompts, outputs, and model access for safer operations.

- Consistent errors and outputs: Normalize responses and exceptions across heterogeneous LLM APIs.

- LLM observability: Centralized logging, tracing, and error tracking for all requests.

- Batches API: Submit and manage high-throughput batch jobs reliably.

- Prompt management: Version, templatize, and standardize prompts across models and teams.

- S3 logging: Store logs and traces in S3 for retention, auditing, and analytics.

- Pass-through endpoints: Access provider-specific features while maintaining a unified gateway.

- Model access controls: Restrict model usage by team, environment, or key to meet governance needs.

Who Should Use LiteLLM AI

LiteLLM AI is well-suited for platform engineers, MLOps teams, and developers who need a reliable LLM gateway across multiple providers. It benefits data science and product teams seeking consistent APIs, security teams enforcing guardrails and access controls, and finance or ops teams requiring spend tracking and budgets. Use it in startups for rapid iteration or in enterprises for centralized governance, observability, and cost control at scale.

LiteLLM AI Steps to Use

- Deploy the gateway (self-host or container) and configure a base URL for your applications.

- Add provider API keys securely (e.g., environment variables or a secrets store).

- Define routing rules: select default models, set fallbacks, and enable load balancing.

- Point your app’s OpenAI SDK or HTTP client to the LiteLLM base URL to maintain the OpenAI format.

- Configure rate limits, budgets, and model access controls per team or key.

- Enable logging and observability; choose storage like S3 logging for traces and metrics.

- Set up guardrails and prompt templates to standardize quality and safety across models.

- Use the Batches API for high-throughput jobs and monitor spend with dashboards and alerts.

LiteLLM AI Industry Use Cases

Product teams implement LiteLLM AI to fail over between providers and keep features online during outages. Data science groups use it to A/B test models across vendors with consistent metrics and cost tracking. Support and ops teams route workloads to cost-effective models during peak hours with budgets and rate limiting. Compliance-focused organizations apply guardrails and access controls to standardize prompts, log to S3 for audits, and maintain observability across all LLM interactions.

LiteLLM AI Pros and Cons

Pros:

- Unified, OpenAI-compatible interface for 100+ LLMs.

- Centralized authentication, budgets, and spend visibility.

- Consistent outputs and error handling across providers.

- Strong governance: model access, rate limits, guardrails.

- Observability and logging with S3 and tracing support.

- Flexible routing with load balancing, fallbacks, and pass-through.

Cons:

- Additional gateway layer introduces operational overhead.

- Provider-specific features may require pass-through setup and careful configuration.

- Observability and logging can add storage and privacy considerations if not tuned.

- Performance depends on network, provider latency, and routing policies.

LiteLLM AI FAQs

-

Does LiteLLM AI work with OpenAI SDKs?

Yes. Point your SDK or HTTP client to the LiteLLM base URL to keep the OpenAI request/response format.

-

How does spend tracking and budgeting work?

You can monitor usage by model, team, or key and apply budgets and limits to control consumption.

-

Is streaming and function/tool calling supported?

Streaming and function/tool calls are supported where the upstream provider supports them, via the unified gateway.

-

Can I log requests to my own storage?

Yes. Configure observability and enable S3 logging to store traces and audit data in your environment.

-

How do I add a new provider or model?

Add the provider API key, map the model in configuration, set routing or fallbacks, and call it through the same endpoint.