Synexa

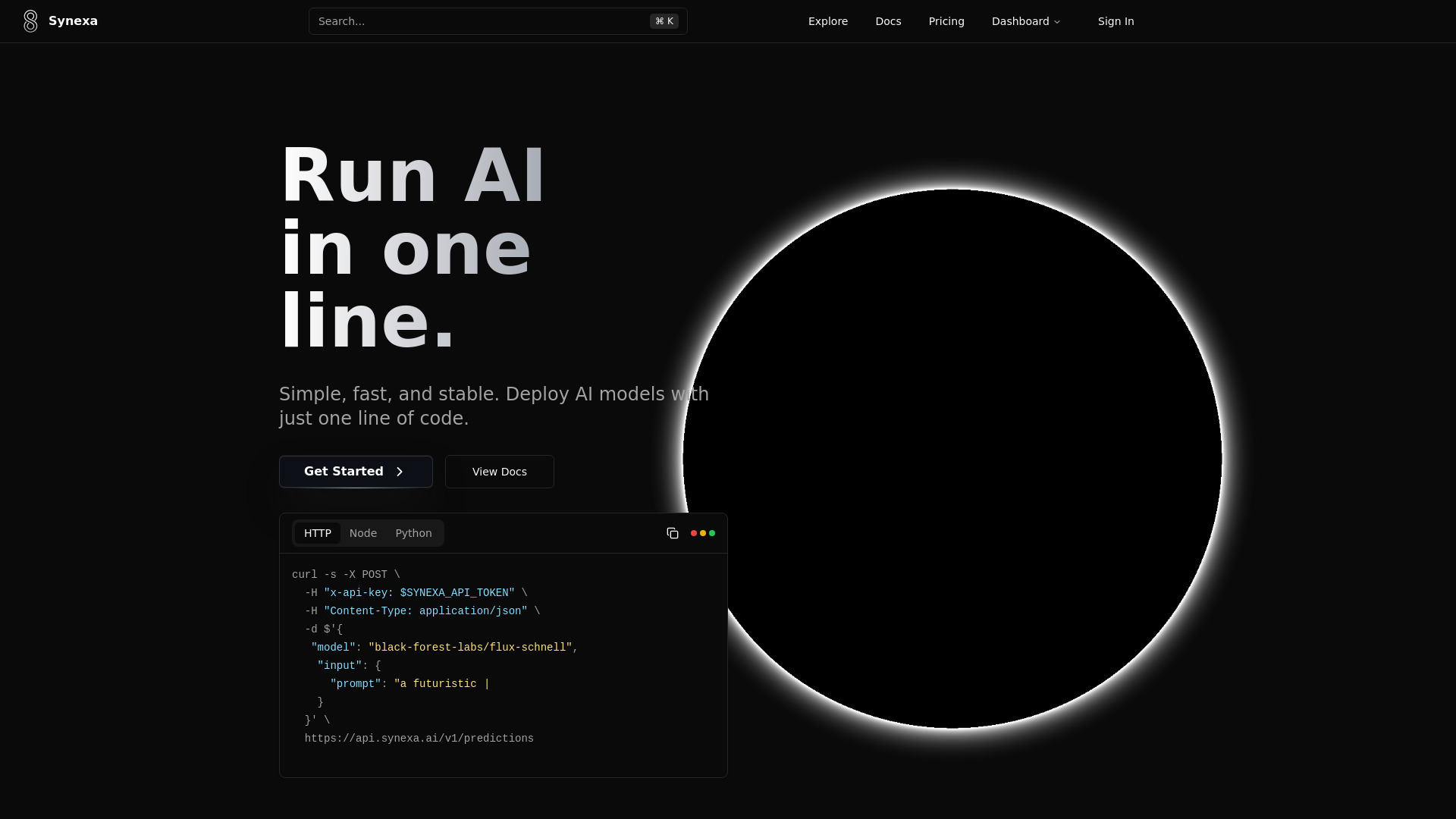

Tool Introduction: Synexa AI runs 100+ models with one line of code: fast GPUs, auto-scaling.

Inclusion Date: Oct 28, 2025

Tool Information

What is Synexa AI

Synexa AI is an AI deployment and infrastructure platform that lets teams run models with a single line of code. Built for speed, stability, and a developer-first workflow, it abstracts GPU scheduling and autoscaling so teams can move from prototype to production quickly. It pairs a fast inference engine with access to 100+ production-ready models, streamlines hosting, routing, and monitoring, and helps control spend through efficient, on-demand GPU use.

Synexa AI Main Features

- One-line integration: Launch and call models instantly with minimal setup, reducing time-to-first-inference.

- 100+ production-ready models: Access a broad catalog of LLM, vision, and speech models ready for real-world workloads.

- Blazing-fast inference engine: Optimized paths for low latency and high throughput under varied loads.

- Automatic scaling: Serverless autoscaling that adapts to traffic spikes without manual capacity planning.

- Cost-effective GPU pricing: Efficient, on-demand GPU utilization designed to keep unit economics predictable.

- Developer-friendly experience: Clean APIs, SDK support, and clear examples that shorten integration time.

- Stable production endpoints: Reliable, fault-tolerant serving to keep applications responsive in production.

- Usage visibility: Built to surface performance and usage signals that help optimize cost and latency.

Who Is Synexa AI For

Synexa AI suits software engineers, ML engineers, data scientists, and product teams who need to ship AI features quickly without managing GPU infrastructure. It fits startups validating AI ideas, SaaS companies embedding LLM or vision features, and enterprises seeking dependable, scalable inference for production-grade applications.

How to Use Synexa AI

- Create an account and obtain your API key.

- Select a model from the catalog that matches your task (e.g., text generation, image understanding, speech).

- Install the preferred SDK or use HTTPS to call the unified API.

- Run your first request with the one-line code snippet, verify output, and tune parameters.

- Configure autoscaling and hardware preferences to balance cost and latency.

- Integrate into your app or pipeline, then monitor performance and usage to optimize.
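The first request described in the steps above can be sketched as follows. This is a minimal illustration, not Synexa AI's documented API: the base URL, the `/predictions` path, the `model` and `input` payload fields, the Bearer-token header, and the `SYNEXA_API_KEY` variable name are all assumptions chosen for the example. Check the official documentation for the real endpoint, authentication scheme, and request schema.

```python
import json
import os
import urllib.request

# Hypothetical placeholder: replace with the base URL from the official docs.
BASE_URL = "https://api.example.invalid/v1"


def build_prediction_request(api_key: str, model: str, inputs: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for one model call.

    The path, payload shape, and auth header are assumptions for illustration.
    """
    body = json.dumps({"model": model, "input": inputs}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/predictions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    request = build_prediction_request(
        # Hypothetical environment variable name for the API key.
        api_key=os.environ.get("SYNEXA_API_KEY", "your-api-key"),
        model="example/text-model",  # hypothetical model identifier
        inputs={"prompt": "Summarize this ticket in one sentence."},
    )
    # Inspect the request first; call run(request) once the real URL is set.
    print(request.get_method(), request.full_url)
```

Building the request separately from sending it makes it easy to verify the URL, headers, and payload before any GPU time is billed, and to swap in an official SDK later without touching the calling code.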

Synexa AI Industry Use Cases

Product teams embed LLM-powered chat, search, and summarization in SaaS platforms without maintaining clusters. E-commerce apps generate product copy and moderate user content at scale. Media and design tools use vision models for tagging, captioning, and background removal. Operations and support teams deploy text analytics for ticket triage and knowledge retrieval with fast, reliable inference.

Synexa AI Pricing

The platform emphasizes cost-effective GPU pricing with automatic scaling to align spend with demand. For current plan details, rate structures, and any free tiers or trials, please refer to Synexa AI’s official pricing page.

Synexa AI Pros and Cons

Pros:

- Single-line integration accelerates time to production.

- Over 100 ready-to-use models cover common AI workloads.

- Fast, stable inference engine suitable for latency-sensitive apps.

- Automatic scaling removes the burden of capacity management.

- Cost-efficient GPU utilization supports predictable economics.

- Developer-friendly APIs and examples reduce integration overhead.

Cons:

- Vendor dependence may limit deep infrastructure customization compared to self-hosting.

- High-volume workloads can still require careful cost governance.

- Model catalog breadth may not cover very niche or proprietary architectures.

- Data residency or compliance needs may require additional review.

Synexa AI FAQs

- Which models are available?

Synexa AI provides access to an extensive catalog of 100+ production-ready models spanning language, vision, and speech tasks.

- How does Synexa AI achieve low latency?

A fast inference engine and a GPU-optimized serving pipeline reduce response times while maintaining stability under load.

- Can it scale automatically with traffic?

Yes. Automatic scaling adjusts capacity to match demand, minimizing manual provisioning and improving reliability during spikes.

- Is there an SDK or unified API?

Yes. Synexa AI exposes a unified API that can be called over HTTPS or through an SDK, with clean interfaces and examples aimed at quick integration.

- How is pricing structured?

Synexa AI highlights cost-effective GPU pricing and on-demand scaling. For exact rates or trial availability, check the official pricing page.