AiHubMix

Open Website-

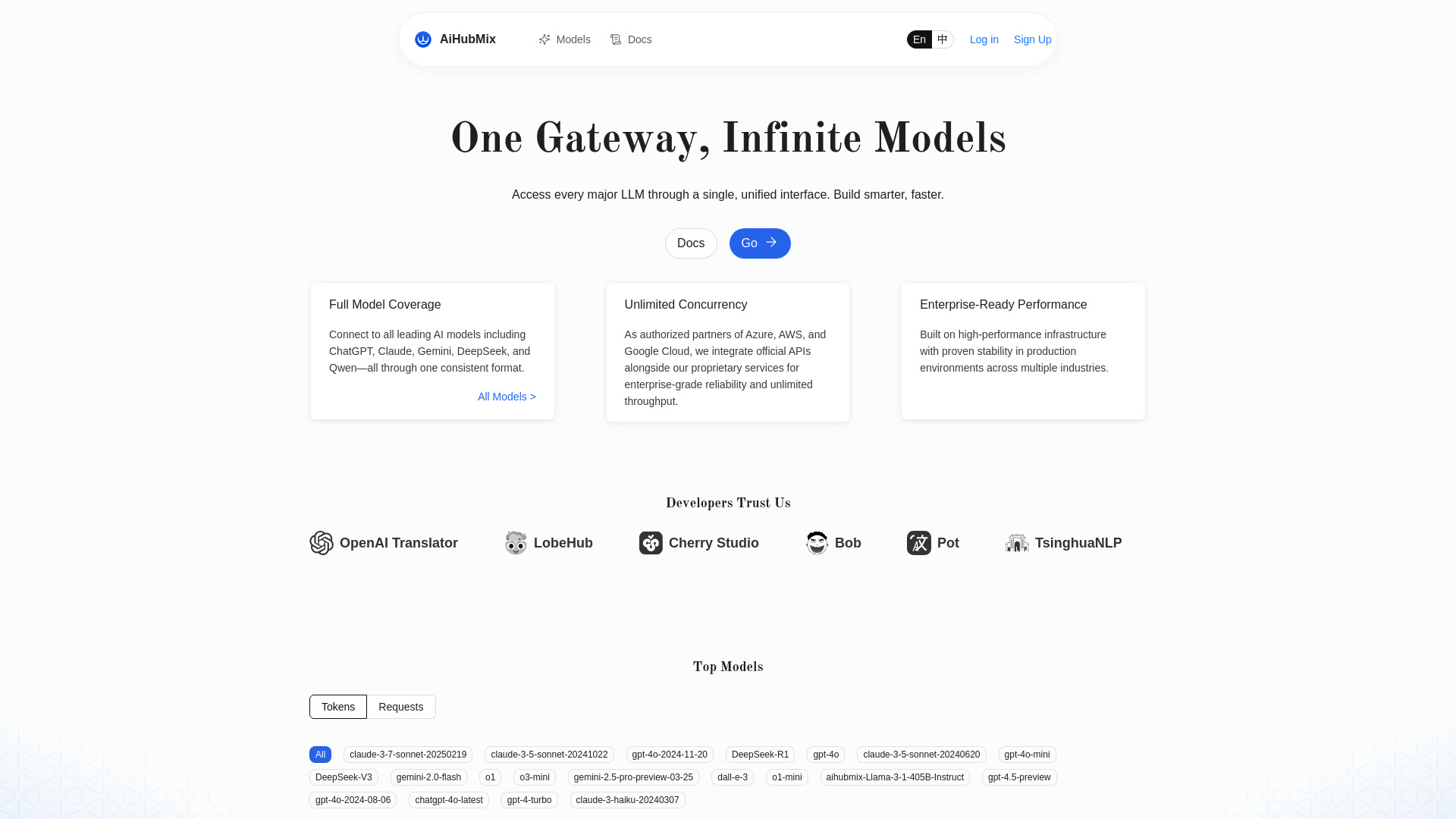

Tool Introduction: OpenAI-style API router for Gemini, Claude, and Qwen, with unlimited concurrency.

Inclusion Date: Nov 02, 2025

Tool Information

What is AiHubMix

AiHubMix is an LLM API router and OpenAI API proxy that aggregates models from leading providers, including OpenAI, Google (Gemini), DeepSeek, Meta (Llama), Alibaba (Qwen), Anthropic (Claude), Moonshot AI, and Cohere, behind a single OpenAI-compatible interface. Because every model is called through the same API standard, teams can switch models by changing the model name rather than rewriting integration code, adopt new releases quickly, and scale with what the service describes as unlimited concurrency. The result is simpler multi-model integration, less vendor lock-in, and faster development of AI applications.

Main Features of AiHubMix

- Unified OpenAI API standard: Call diverse LLMs through a single, OpenAI-compatible endpoint without rewriting your stack.

- Model aggregation: Access OpenAI, Gemini, DeepSeek, Llama, Qwen, Claude, Moonshot AI, and Cohere via one gateway.

- Latest model support: Adopt new and updated models quickly to keep applications current.

- Unlimited concurrency: Handle high-throughput workloads and bursty traffic without artificial caps.

- Easy model switching: Change providers or versions by updating the model name, not your codebase.

- Developer-friendly integration: Works with existing OpenAI SDKs and tooling for a familiar workflow.

- Consistent parameters: Standardized request/response formats simplify multi-model orchestration.
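Because responses follow one format, a single parser can read replies from any routed model. The sketch below assumes AiHubMix returns the standard OpenAI chat-completion shape (`choices[0].message.content`); the sample body is hand-written for illustration, not a captured AiHubMix response, and its model name is hypothetical.

```python
import json


def extract_reply(raw_body: str) -> tuple:
    """Return (model, assistant_text) from an OpenAI-style chat completion body.

    Assumes the standard schema: {"model": ..., "choices": [{"message": {...}}]}.
    """
    body = json.loads(raw_body)
    choices = body.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    message = choices[0].get("message", {})
    # content can be null (e.g. tool-call replies), so fall back to "".
    return body.get("model", ""), message.get("content") or ""


# Hand-written sample in the OpenAI format (hypothetical model name, not real output).
SAMPLE = json.dumps({
    "model": "example-model",
    "choices": [
        {"index": 0,
         "message": {"role": "assistant", "content": "Hello!"},
         "finish_reason": "stop"}
    ],
})

if __name__ == "__main__":
    print(extract_reply(SAMPLE))
```

The same function works unchanged whichever provider served the request, which is the practical payoff of a standardized response format.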

Who Can Use AiHubMix

AiHubMix is ideal for software engineers, AI product teams, and startups that need a reliable OpenAI-compatible gateway to many large language models. It suits enterprises seeking vendor flexibility, research groups comparing model performance, and agencies deploying chatbots, assistants, and content tools across multiple providers without maintaining separate integrations.

How to Use AiHubMix

- Configure your base URL to point to AiHubMix’s OpenAI-compatible endpoint.

- Select the target model (e.g., gpt-4, gemini, claude, llama, qwen) by name in your request.

- Use your existing OpenAI SDK or HTTP client to send chat/completion requests as usual.

- Tune parameters (temperature, max tokens, system prompts) and test responses.

- Scale out concurrency for batch tasks, streaming responses, or high-traffic services.

- Iterate by switching models or versions to balance quality, speed, and cost.
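The steps above can be sketched with only the Python standard library. This is a minimal sketch under stated assumptions: the base URL `https://aihubmix.com/v1`, the `AIHUBMIX_API_KEY` environment variable, and the model name `gpt-4o-mini` are illustrative and should be checked against the AiHubMix documentation. The builder does not touch the network; only `send` makes a real call.

```python
import json
import os
import urllib.request

# Assumption: AiHubMix's OpenAI-compatible base URL; confirm it in the official docs.
BASE_URL = "https://aihubmix.com/v1"


def build_chat_request(api_key: str, model: str, messages: list,
                       temperature: float = 0.7, max_tokens: int = 256,
                       stream: bool = False) -> urllib.request.Request:
    """Build (without sending) a POST to the OpenAI-style /chat/completions route."""
    payload = {
        "model": model,              # routing key: change this to switch models
        "messages": messages,        # system/user/assistant turns
        "temperature": temperature,
        "max_tokens": max_tokens,
        "stream": stream,            # streaming replies need SSE parsing, not shown
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON reply (performs a real network call)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical env var and model name; substitute your own values.
    req = build_chat_request(
        os.environ.get("AIHUBMIX_API_KEY", "YOUR_KEY"),
        "gpt-4o-mini",
        [{"role": "system", "content": "You are concise."},
         {"role": "user", "content": "Say hello."}],
    )
    print(req.full_url, req.get_method())
```

With the official `openai` Python package (v1 or later), the equivalent configuration is `OpenAI(api_key=..., base_url=BASE_URL)` followed by `client.chat.completions.create(model=..., messages=...)`.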

AiHubMix Use Cases

Teams use AiHubMix to power multi-model chatbots, compare providers for RAG and agents, run large-scale content generation pipelines, and unify embeddings or text generation across regions. Product teams can A/B test Gemini vs. Claude vs. GPT with the same code, migrate traffic between providers during spikes, and rapidly adopt new Llama or Qwen releases without refactoring.
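An A/B test across providers mostly comes down to deciding which model name each request carries. A minimal sketch, assuming deterministic per-user bucketing is what you want; the model identifiers are hypothetical placeholders, not confirmed AiHubMix names.

```python
import hashlib
from typing import Dict, Sequence

# Hypothetical model identifiers; check AiHubMix's model list for exact names.
VARIANTS = ("gpt-4o", "gemini-1.5-pro", "claude-3-5-sonnet")


def assign_model(user_id: str, variants: Sequence[str] = VARIANTS) -> str:
    """Deterministically bucket a user into one variant (stable across runs)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


def build_payload(user_id: str, prompt: str) -> Dict[str, object]:
    """Identical request body for every arm; only the model name differs."""
    return {
        "model": assign_model(user_id),
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }


if __name__ == "__main__":
    for uid in ("alice", "bob", "carol"):
        print(uid, build_payload(uid, "Summarize this ticket.")["model"])
```

SHA-256 is used instead of Python's built-in `hash()` because string hashing is randomized per process, which would reshuffle users between runs.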

Pros and Cons of AiHubMix

Pros:

- Single OpenAI-compatible API for many LLM providers.

- Quick model switching and rapid access to the latest models.

- Unlimited concurrency for scalable workloads.

- Reduces integration complexity and vendor lock-in.

- Works with familiar OpenAI SDKs and tooling.

Cons:

- Provider-specific features may not be fully exposed through the standardized interface.

- Behavior and quality differ across providers, requiring evaluation.

- Latency and quotas can depend on upstream model providers.
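Since latency and quotas depend on upstream providers, a client can fall back to another model when one fails, which is also one way to migrate traffic during spikes. A minimal sketch: `UpstreamError`, `flaky_send`, and both model names are hypothetical stand-ins for your real HTTP call and error handling.

```python
from typing import Callable, List, Tuple


class UpstreamError(Exception):
    """Hypothetical error a sender raises on timeouts, 429s, or 5xx replies."""


def complete_with_fallback(prompt: str, models: List[str],
                           send: Callable[[str, str], str]) -> Tuple[str, str]:
    """Try each model in order and return (model, reply) from the first success."""
    failures: List[str] = []
    for model in models:
        try:
            return model, send(model, prompt)
        except UpstreamError as exc:
            failures.append(f"{model}: {exc}")  # record, then try the next model
    raise RuntimeError("all models failed: " + "; ".join(failures))


def flaky_send(model: str, prompt: str) -> str:
    """Stand-in sender: the first (hypothetical) model is 'over quota'."""
    if model == "primary-model":
        raise UpstreamError("429 quota exceeded")
    return f"{model} says: {prompt}"


if __name__ == "__main__":
    print(complete_with_fallback("hi", ["primary-model", "backup-model"], flaky_send))
```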

FAQs about AiHubMix

Is AiHubMix compatible with OpenAI SDKs?

Yes. Point your SDK to the AiHubMix base URL and set the desired model name to route requests.

Which models are supported?

It aggregates models from leading providers, including OpenAI, Google (Gemini), DeepSeek, Meta (Llama), Alibaba (Qwen), Anthropic (Claude), Moonshot AI, and Cohere.

Can I switch models without changing code?

In most cases, you only update the model identifier, keeping the rest of your integration intact.

Does it support high-throughput workloads?

Yes. AiHubMix is designed for unlimited concurrency to handle spikes and large batches.
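For large batches, requests can be fanned out concurrently on the client side. A minimal sketch using a thread pool: `fake_send` is a hypothetical stand-in for a real API call, and `max_workers` bounds client threads, which is still sensible even if the gateway itself imposes no cap, since upstream providers may rate-limit.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def run_batch(prompts: List[str], send: Callable[[str], str],
              max_workers: int = 16) -> List[str]:
    """Send prompts concurrently; results are returned in input order.

    `send` performs one request (e.g. an HTTP call to an OpenAI-compatible
    endpoint); max_workers caps the number of client-side threads.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map yields results in input order even if calls finish out of order.
        return list(pool.map(send, prompts))


def fake_send(prompt: str) -> str:
    """Stand-in for a real API call: sleeps briefly, then echoes the prompt."""
    time.sleep(0.01 * (len(prompt) % 3))
    return f"reply to {prompt}"


if __name__ == "__main__":
    print(run_batch(["a", "bb", "ccc"], fake_send, max_workers=3))
```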