- Home

- AI Developer Tools

- Confident AI

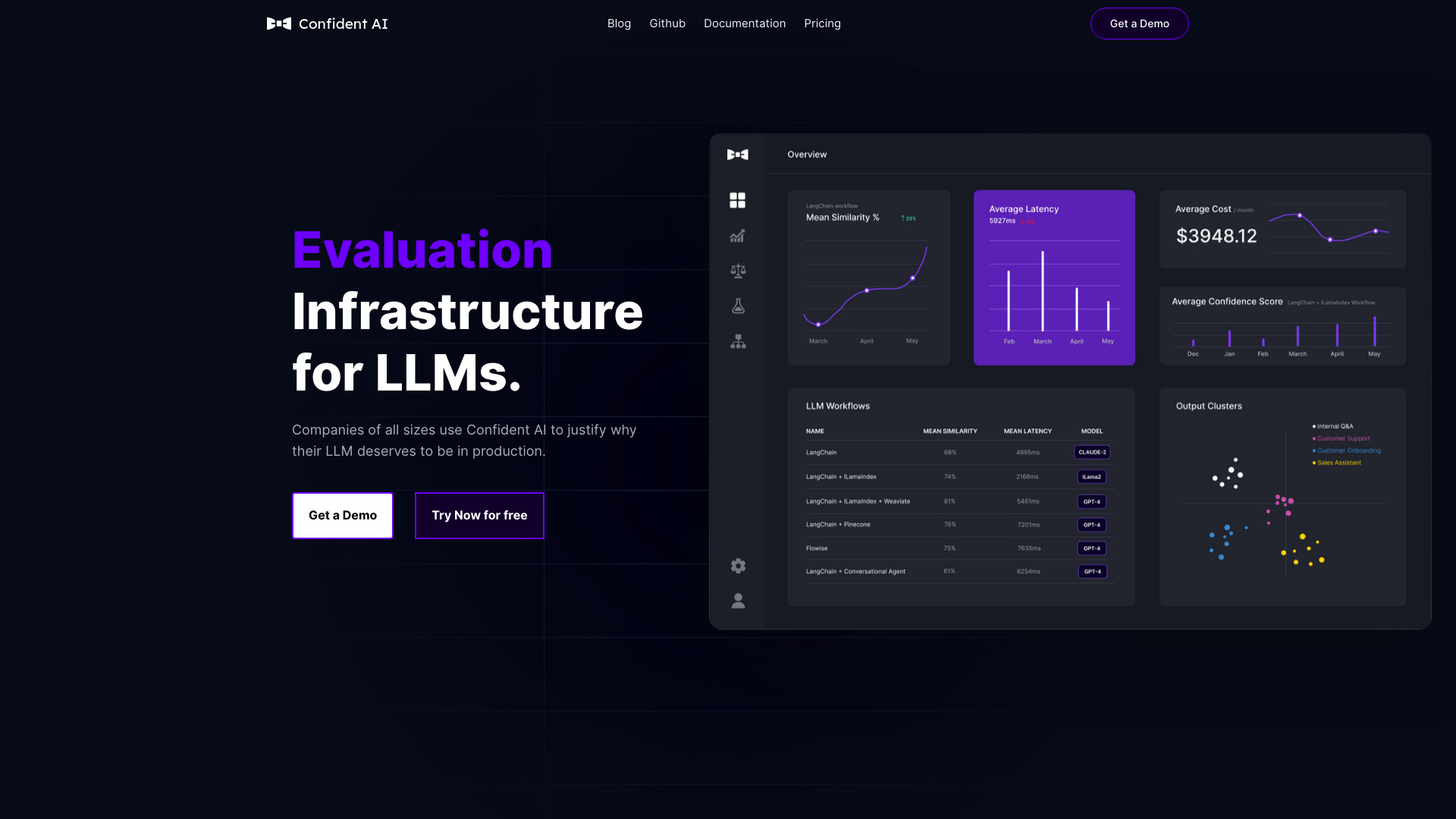

Confident AI


Tool Introduction: DeepEval-native LLM evaluation with 14+ metrics, tracing, and dataset tooling.


Inclusion Date: Nov 06, 2025


Tool Information

What is Confident AI

Confident AI is an all-in-one LLM evaluation platform built by the creators of DeepEval. It combines 14+ evaluation metrics with tools to run experiments, manage datasets, trace prompts and outputs, and incorporate human feedback, so teams can continuously improve model quality. It is designed to work with the open-source DeepEval framework and supports use cases ranging from RAG pipelines to agents. Teams use Confident AI to benchmark, monitor, and safeguard LLM applications with consistent metrics and detailed tracing, which streamlines dataset curation, metric alignment, and automated testing.

Main Features of Confident AI

- Comprehensive metrics suite: Evaluate quality, relevance, robustness, safety, latency, and cost across 14+ metrics for reliable LLM performance evaluation.

- Dataset management and curation: Build, version, and label datasets to align tests with real user scenarios and track changes over time.

- Experiment tracking and benchmarking: Compare prompts, models, and configurations; detect regressions and identify the best-performing variants.

- Human-in-the-loop feedback: Collect annotations and integrate human judgment to calibrate metrics and refine evaluation criteria.

- Tracing and observability: Trace inputs, context, and outputs to diagnose failures, analyze token usage, and understand model behavior.

- Automated testing and CI: Run evaluations on every change with regression gates to ship safer updates faster.

- DeepEval integration: Use the open-source DeepEval framework and flexible SDKs to instrument any LLM workflow.

- Collaboration and reporting: Share findings with stakeholders via dashboards and artifacts that demonstrate improvements.
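To make the human-in-the-loop item above concrete, here is a minimal sketch of one way annotations could be used to calibrate a metric: pick the pass/fail threshold whose verdicts agree most often with human reviewers. This is plain Python, not the Confident AI or DeepEval API; the function names, scores, labels, and candidate thresholds are all hypothetical.

```python
from typing import List, Sequence, Tuple


def agreement(scores: Sequence[float], human_pass: Sequence[bool], threshold: float) -> float:
    """Fraction of examples where (score >= threshold) matches the human verdict."""
    if len(scores) != len(human_pass):
        raise ValueError("scores and human_pass must have the same length")
    if not scores:
        raise ValueError("at least one annotated example is required")
    matches = sum((s >= threshold) == h for s, h in zip(scores, human_pass))
    return matches / len(scores)


def calibrate_threshold(
    scores: Sequence[float],
    human_pass: Sequence[bool],
    candidates: List[float],
) -> Tuple[float, float]:
    """Return (threshold, agreement) for the candidate that best matches the annotations.

    Ties go to the earliest candidate in the list.
    """
    best = max(candidates, key=lambda t: agreement(scores, human_pass, t))
    return best, agreement(scores, human_pass, best)


# Hypothetical automated metric scores and the matching human pass/fail annotations.
scores = [0.92, 0.81, 0.40, 0.65, 0.30, 0.75]
human_pass = [True, True, False, False, False, True]

threshold, agree = calibrate_threshold(scores, human_pass, [0.5, 0.6, 0.7, 0.8])
print(f"threshold={threshold} agreement={agree:.2f}")
```

With a handful of annotations the chosen threshold is easy to overfit, so a larger labeled sample is advisable before relying on it.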

Who Can Use Confident AI

Confident AI is built for ML engineers, LLM engineers, data scientists, QA teams, AI platform teams, and product managers who need to evaluate, benchmark, and monitor LLM applications. It fits startups validating MVPs, growth-stage teams optimizing RAG systems or agents, and enterprises standardizing LLM testing, governance, and reporting across multiple products.

How to Use Confident AI

- Connect your project using the DeepEval SDK or API and instrument your LLM pipeline for evaluation and tracing.

- Define success criteria: select metrics, thresholds, and targets aligned to your use case (e.g., relevance, faithfulness, latency, cost).

- Import or curate datasets: add real or synthetic examples, version them, and attach labels or expected behaviors.

- Configure experiments: create prompt/model variants and run evaluations locally or in CI.

- Review results: analyze dashboards, compare runs, and inspect traces to diagnose errors.

- Incorporate human feedback: add annotations to refine metrics and guide improvements.

- Automate testing: set regression gates and alerts to safeguard future releases.
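The "define success criteria" and "automate testing" steps above can be sketched as a small threshold gate. This is an illustrative stand-in written in plain Python, not the Confident AI or DeepEval API: the `Criterion` class, the `gate` function, the metric names, and every number below are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Criterion:
    """One success criterion: a metric name, a threshold, and a direction."""
    metric: str
    threshold: float
    higher_is_better: bool = True  # False for metrics such as latency or cost

    def passes(self, value: float) -> bool:
        if self.higher_is_better:
            return value >= self.threshold
        return value <= self.threshold


def gate(criteria: List[Criterion], results: Dict[str, float]) -> List[str]:
    """Return one readable failure per unmet criterion; an empty list means pass.

    A metric missing from the results counts as a failure.
    """
    failures = []
    for c in criteria:
        if c.metric not in results:
            failures.append(f"{c.metric}: no result reported")
        elif not c.passes(results[c.metric]):
            op = ">=" if c.higher_is_better else "<="
            failures.append(f"{c.metric}: {results[c.metric]} (required {op} {c.threshold})")
    return failures


# Hypothetical criteria covering the example metrics named in the steps above.
criteria = [
    Criterion("relevance", 0.80),
    Criterion("faithfulness", 0.85),
    Criterion("latency_seconds", 2.0, higher_is_better=False),
    Criterion("cost_usd_per_request", 0.01, higher_is_better=False),
]

# Hypothetical aggregate scores from one evaluation run.
candidate_run = {
    "relevance": 0.88,
    "faithfulness": 0.79,
    "latency_seconds": 1.4,
    "cost_usd_per_request": 0.004,
}

failures = gate(criteria, candidate_run)
for line in failures:
    print(line)

# In a CI job this value would typically be passed to sys.exit().
exit_code = 1 if failures else 0
```

Recording the direction on each criterion lets quality scores and operational metrics such as latency and cost share a single gate.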

Confident AI Use Cases

Teams use Confident AI to validate RAG pipelines for knowledge search, benchmark customer support assistants, assess content generation quality, and monitor chatbots for safety and robustness. In e-commerce, it evaluates product Q&A and retrieval relevance; in fintech, it helps compare models for accuracy and policy alignment; in SaaS, it supports CI-driven LLM testing to reduce regressions and control inference costs.

Pros and Cons of Confident AI

Pros:

- End-to-end LLM evaluation with 14+ metrics and robust tracing.

- Tight integration with the open-source DeepEval framework.

- Dataset curation, versioning, and human-in-the-loop feedback.

- Experiment tracking, comparisons, and CI automation for regression control.

- Helps reduce inference spend by tracking cost alongside quality when comparing prompts and models.

Cons:

- Initial instrumentation and dataset setup require time and engineering effort.

- Metrics must be carefully chosen to reflect business goals; poor alignment can mislead decisions.

- Evaluation runs add overhead to workflows and may increase compute usage.

FAQs about Confident AI


Does Confident AI work with DeepEval?

Yes. Confident AI is built by the creators of DeepEval and integrates directly with the open-source framework.


What types of LLM applications can it evaluate?

It supports a wide range of use cases, including RAG systems, chatbots, agents, and content generation pipelines.


Can I run evaluations in CI/CD?

Yes. You can automate tests with regression gates to safeguard releases and ensure consistent quality.


How does human feedback improve results?

Annotations align metrics with user expectations, helping refine evaluation criteria and guide iterative improvements.


Does it support multiple model providers?

Yes. You can instrument any model accessible through DeepEval or your own APIs, which keeps evaluations provider-agnostic.