- Home

- AI Code Assistant

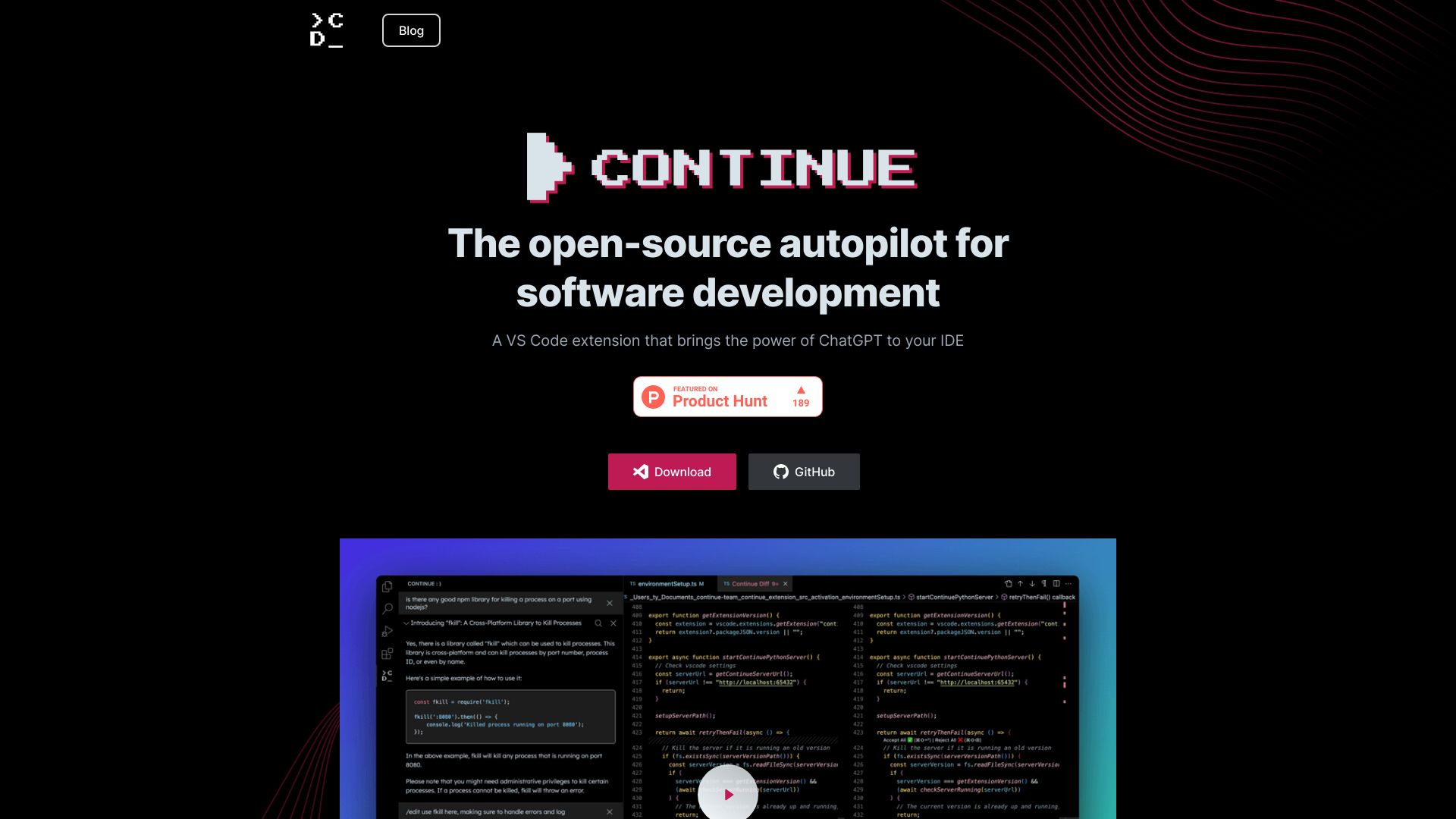

- Continue

Continue

Tool Introduction: Open-source AI coding assistant for VS Code with in-IDE chat and code autocomplete.

Inclusion Date: Oct 21, 2025

Tool Information

What is Continue AI

Continue AI is an open‑source autopilot for software development that runs inside Visual Studio Code. It brings ChatGPT‑style chat and AI code autocomplete directly to your IDE, and is built to be deeply customizable. You can connect different large language models—cloud or local—and wire in rich context from your codebase and tools to get precise, context‑aware assistance. With a simple configuration, teams can build tailored prompts, commands, and behaviors that continuously learn from development data, helping you ship faster while keeping tight control over privacy and architecture.

Continue AI Main Features

- In‑IDE chat assistant: Ask questions, explain code, and generate snippets with project context without leaving VS Code.

- AI code autocomplete: Smart, context‑aware suggestions that align with your coding patterns and style.

- Custom model integration: Connect major API providers or local runtimes to use the models that fit your needs and budget.

- Configurable workflows: Define prompts, slash commands, and behaviors to match your team’s coding standards.

- Context control: Bring in relevant files, selections, diffs, and metadata so answers reflect your actual codebase.

- Privacy and governance: Keep data local with self‑hosted or on‑device models, or route selectively to external providers you trust.

- Extensible and open‑source: Transparent codebase and flexible config enable deep customization and collaboration.

Who Should Use Continue AI

Continue AI suits software engineers who want a context‑aware coding assistant inside VS Code, teams maintaining large or fast‑moving codebases, and organizations that need an open‑source, model‑agnostic solution. It is also helpful for full‑stack developers, backend and frontend specialists, DevOps and data engineers, and new contributors onboarding to complex repositories.

How to Use Continue AI

- Install the Continue extension from the VS Code Marketplace.

- Open the Continue panel, or run its command from the command palette, to start a chat or enable autocomplete.

- Connect a model by adding an API key or pointing to a local runtime; set your default model.

- Configure context: choose which files, selections, or repositories the assistant can reference.

- Start coding: accept autocomplete suggestions or highlight code and ask for explanations, refactors, or tests.

- Iterate and refine: tweak prompts and create custom commands to fit your workflow.

- Optional: share a team configuration so everyone uses consistent prompts and settings.
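The model and custom-command steps above can be sketched as a small script that assembles a configuration and prints it as JSON. This is an illustration only: the key names (`models`, `tabAutocompleteModel`, `customCommands`, `provider`, `apiKey`) are assumptions modeled on a JSON-style Continue config, and the provider and model names are placeholders. Check the current Continue documentation for the exact schema and file location before relying on any of it.

```python
import json
from typing import Optional


def build_config(provider: str, model: str, api_key: Optional[str] = None) -> dict:
    """Assemble a config dictionary with one model and one custom command.

    All key names here are assumptions for illustration, not a verified schema.
    """
    model_entry = {
        "title": f"{provider}:{model}",
        "provider": provider,
        "model": model,
    }
    if api_key is not None:
        # Cloud providers typically need a key; local runtimes usually do not.
        model_entry["apiKey"] = api_key

    return {
        "models": [model_entry],
        # Reuse the same model for autocomplete; a smaller model is often chosen instead.
        "tabAutocompleteModel": dict(model_entry),
        "customCommands": [
            {
                "name": "test",
                "description": "Write unit tests for the highlighted code",
                "prompt": "Write unit tests for the selected code. Cover edge cases.",
            }
        ],
    }


# Example: a local runtime with no API key (names are placeholders).
config = build_config("ollama", "llama3")
print(json.dumps(config, indent=2))
```

Keeping a file like this in version control is one way to share consistent prompts and settings across a team.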

Continue AI Industry Use Cases

Product teams use Continue AI to speed up feature delivery with inline refactoring, bug‑fix suggestions, and unit test generation. Enterprises apply it to modernize legacy code by guiding cross‑file changes and explaining unfamiliar modules. Data engineering teams leverage chat to debug jobs and document pipelines. Open‑source maintainers draft pull request descriptions and code reviews faster, while keeping control over which models and contexts are used.

Continue AI Pricing

Continue AI is open‑source and free to use as a VS Code extension. If you connect commercial cloud models, usage is billed by the respective provider. Running local or self‑hosted models can reduce per‑request costs but may require compute resources. There is no per‑seat charge for using the extension itself.
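Because cloud usage is billed by the model provider, a rough estimate can help when comparing cloud and local options. The sketch below assumes per-token pricing quoted per million tokens. The request volume, token counts, and prices are hypothetical values chosen for the arithmetic, not actual rates from any provider.

```python
def estimate_monthly_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
    working_days: int = 22,
) -> float:
    """Estimate monthly spend for one developer under per-token billing."""
    # Cost of a single request: tokens times price, scaled from "per million".
    per_request = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    ) / 1_000_000
    return per_request * requests_per_day * working_days


# Hypothetical numbers: 200 requests a day, 2,000 input and 300 output tokens each,
# priced at 3.00 and 15.00 per million tokens respectively.
cost = estimate_monthly_cost(200, 2000, 300, 3.0, 15.0)
print(f"Estimated monthly cost: {cost:.2f}")
```

With these inputs each request costs about 0.0105, which comes to roughly 46.20 per developer per month. Local models replace this variable cost with hardware and power costs.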

Continue AI Pros and Cons

Pros:

- Open‑source, transparent, and highly customizable.

- Works natively inside VS Code for minimal context switching.

- Model‑agnostic: connect cloud or local LLMs.

- Context‑aware assistance grounded in your codebase.

- Strong privacy control with local processing options.

- Team‑friendly configuration for consistent workflows.

Cons:

- Initial configuration and model setup can take time.

- Answer quality depends on the model and provided context.

- Using cloud providers may raise data and compliance considerations.

- Local models require adequate hardware to perform well.

- Focused on VS Code; other IDEs may not be supported in the same way.

Continue AI FAQs

- Do I need an OpenAI account to use Continue AI?

No. You can connect various providers or use local models. Choose the option that fits your privacy and cost requirements.

- Will my code be sent to external servers?

Only if you configure a cloud model. You control what context is shared and can keep everything local by using on‑device or self‑hosted models.

- Can it work offline?

Yes, with local models and local context sources. Cloud providers require internet access.

- How do I customize prompts and commands?

Use the extension’s configuration to define system prompts, slash commands, and behaviors tailored to your repository and team standards.

- Does it handle large monorepos?

Yes, by selecting relevant files and context chunks. Performance and results depend on your model choice and machine resources.
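To make "selecting relevant files and context chunks" concrete, here is a minimal sketch of one generic approach: rank files by keyword overlap with the question, then keep the best matches that fit a size budget. This is not Continue's actual retrieval logic. The function names, the scoring heuristic, and the character-based budget are all assumptions made for illustration.

```python
import re
from typing import Dict, List, Set


def tokenize(text: str) -> Set[str]:
    """Split text into a set of lowercase identifier-like words."""
    return set(re.findall(r"[a-z_][a-z0-9_]*", text.lower()))


def select_context(query: str, files: Dict[str, str], budget_chars: int) -> List[str]:
    """Return paths of the most relevant files that fit within the budget."""
    query_terms = tokenize(query)
    scored = []
    for path, content in files.items():
        overlap = len(query_terms & tokenize(path + " " + content))
        if overlap > 0:
            scored.append((overlap, path))

    # Highest overlap first; ties are broken alphabetically for stable output.
    scored.sort(key=lambda item: (-item[0], item[1]))

    selected, used = [], 0
    for _, path in scored:
        size = len(files[path])
        if used + size <= budget_chars:
            selected.append(path)
            used += size
    return selected


# Hypothetical repository contents.
files = {
    "billing/invoice.py": "def total_invoice(items): return sum(i.price for i in items)",
    "billing/tax.py": "def apply_tax(amount): return amount * 1.2",
    "auth/login.py": "def login(user, password): ...",
}
question = "why does total_invoice return the wrong sum"

print(select_context(question, files, budget_chars=500))
print(select_context(question, files, budget_chars=70))
```

With the larger budget both billing files are selected and the unrelated login file is skipped. With the smaller budget only the best match fits. This illustrates why results in a large monorepo depend on how much context the chosen model can accept.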