Portkey

Open Website-

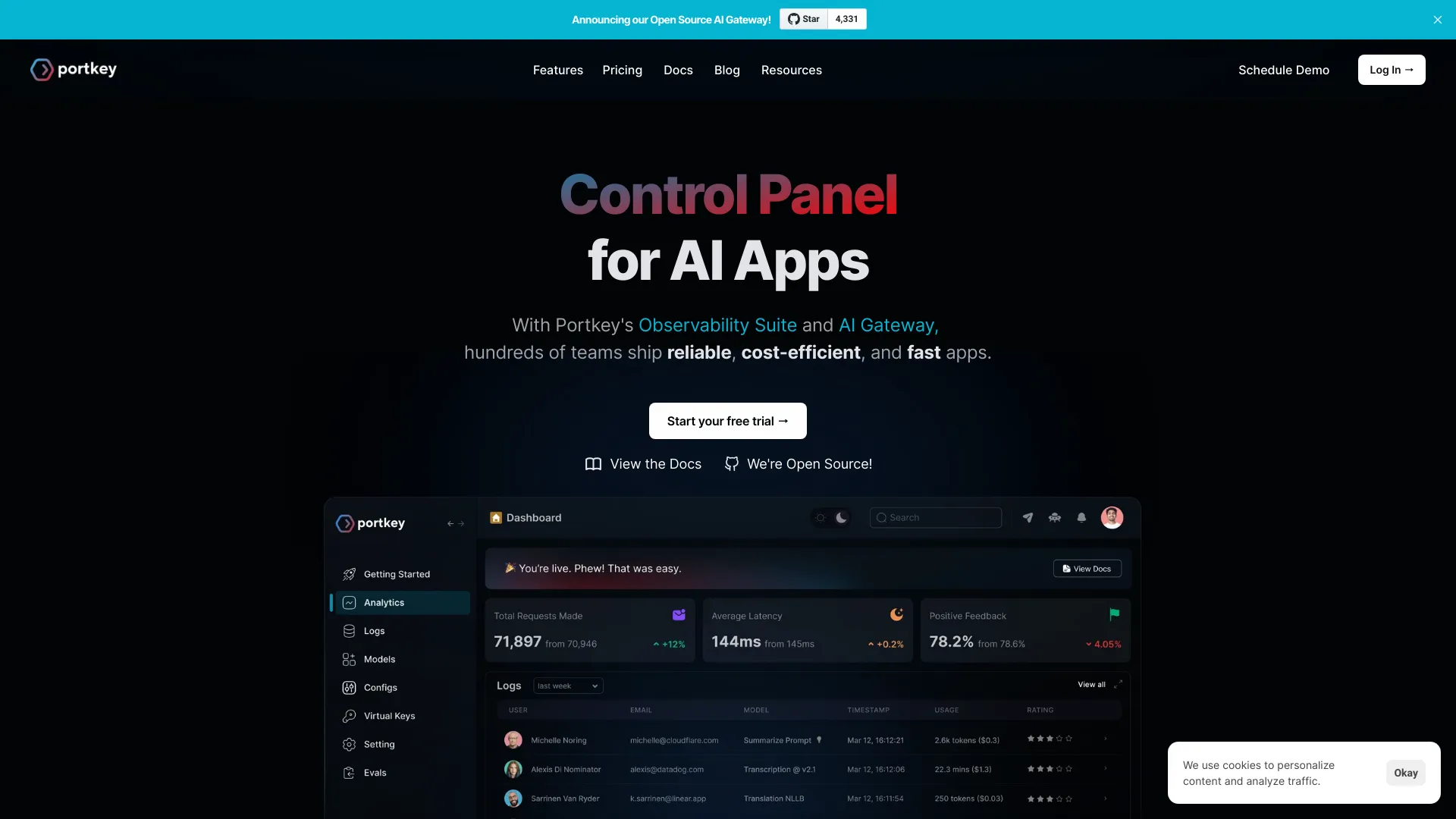

Tool Introduction: A 3-line AI gateway with guardrails and observability that makes agents production-ready.

Inclusion Date: Nov 01, 2025

Tool Information

What is Portkey AI

Portkey AI is a platform that helps teams observe, govern, and optimize LLM-powered applications across the organization with just a few lines of code. With an AI Gateway, prompt management, guardrails, and an observability suite, it centralizes how you build and run chatbots, RAG systems, and autonomous agents. Portkey integrates with frameworks like LangChain, CrewAI, and AutoGen to make agent workflows production-ready, and includes an MCP client so agents can safely access real-world tools. The goal is AI applications that are more reliable, more cost-efficient, and faster at scale.

Main Features of Portkey AI

- AI Gateway: Provider-agnostic routing across major LLMs, with retries, timeouts, rate limits, and intelligent fallback to improve reliability and control costs.

- Observability Suite: End-to-end traces, logs, and metrics for prompts, tokens, latency, and errors to diagnose drift and optimize performance.

- Prompt Management: Centralized prompt templates, versioning, variables, and A/B testing to iterate safely without code redeploys.

- Guardrails & Policy: Content moderation, schema validation, PII masking/redaction, and approval workflows to meet governance requirements.

- Caching & Cost Control: Response caching, deduplication, and quotas to cut token spend and stabilize latency.

- Agent Framework Integrations: Native SDKs and bindings for LangChain, CrewAI, AutoGen, and other major agent frameworks.

- MCP Client & Tools: Build agents that can safely call real-world tools and APIs via the Model Context Protocol.

- Access & Security: Role-based access, API key management, and org-wide policies for consistent governance.
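
To make the AI Gateway bullet concrete, here is a minimal, provider-agnostic sketch of retry-then-fallback routing. It is not Portkey's SDK or config format; `call_with_fallback`, `ProviderError`, and the two provider functions are hypothetical stand-ins for real LLM clients.

```python
import time
from typing import Callable, Sequence


class ProviderError(Exception):
    """Hypothetical error raised when a provider call fails."""


def call_with_fallback(
    providers: Sequence[tuple[str, Callable[[str], str]]],
    prompt: str,
    attempts_per_provider: int = 2,
    backoff_s: float = 0.0,
) -> tuple[str, str]:
    """Try providers in order, retrying each before falling back to the next.

    Returns (provider_name, reply); raises ProviderError if every attempt fails.
    """
    errors: list[str] = []
    for name, call in providers:
        for attempt in range(1, attempts_per_provider + 1):
            try:
                return name, call(prompt)
            except ProviderError as exc:
                errors.append(f"{name} attempt {attempt}: {exc}")
                if attempt < attempts_per_provider:
                    # Exponential backoff before retrying the same provider.
                    time.sleep(backoff_s * 2 ** (attempt - 1))
    raise ProviderError("all providers failed: " + "; ".join(errors))


def flaky_primary(prompt: str) -> str:
    # Simulates a provider outage.
    raise ProviderError("503 Service Unavailable")


def stable_secondary(prompt: str) -> str:
    return f"echo: {prompt}"


provider, reply = call_with_fallback(
    [("primary", flaky_primary), ("secondary", stable_secondary)], "Hello"
)
print(provider, reply)  # secondary echo: Hello
```

A production gateway would also distinguish retryable errors (such as rate limits or server errors) from permanent ones and enforce per-request timeouts; both are left out of this sketch.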

Who Can Use Portkey AI

Portkey AI is built for platform teams, ML engineers, and product developers who operate LLM apps in production. It suits startups shipping their first chatbot, enterprises standardizing AI governance, data teams running RAG pipelines, and agent-focused teams that need observability and guardrails. Compliance, security, and operations teams can use its centralized controls to enforce policies while maintaining developer velocity.

How to Use Portkey AI

- Sign up and create a workspace to centralize projects, environments, and API keys.

- Install the SDK or gateway client and connect your preferred LLM providers.

- Send your LLM calls through the AI Gateway to enable retries, fallback routing, and cost tracking.

- Define prompts with templates and variables, then version and A/B test them.

- Configure guardrails such as moderation, PII masking, and schema validation.

- Integrate with LangChain, CrewAI, or AutoGen to make agent workflows production-ready.

- Use dashboards to monitor traces, token usage, latency, and error rates; set alerts.

- Iterate: tune routing, prompts, and policies based on observability insights.
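
The prompt-management steps above (templates, variables, versioning, A/B testing) can be sketched in plain Python. This is an illustrative in-memory registry, not Portkey's prompt API; `PROMPTS`, `render`, and `ab_version` are hypothetical names, and the 50/50 split is an assumption.

```python
import hashlib
from string import Template

# Hypothetical in-memory registry: prompt id -> version number -> template text.
PROMPTS: dict[str, dict[int, str]] = {
    "support-reply": {
        1: "Answer the customer politely: $question",
        2: "You are a concise support agent. Customer asks: $question",
    }
}


def render(prompt_id: str, version: int, **variables: str) -> str:
    """Fill a versioned template; raises KeyError if a variable is missing."""
    return Template(PROMPTS[prompt_id][version]).substitute(**variables)


def ab_version(user_id: str, split: float = 0.5, a: int = 1, b: int = 2) -> int:
    """Deterministically assign a user to version a or b.

    Hashing the user id keeps each user on the same variant across requests.
    """
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return a if bucket < split * 100 else b


version = ab_version("user-42")
print(version, render("support-reply", version, question="Where is my order?"))
```

Because templates live outside application code, switching the live version or the split is a data change rather than a redeploy, which is the point the step list makes.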

Portkey AI Use Cases

- Customer Support Automation: Ship reliable chatbots with guardrails to prevent unsafe responses and reduce handling time.

- RAG Knowledge Assistants: Monitor retrieval quality, prompt performance, and cost across knowledge bases.

- Sales & CX Copilots: A/B test prompts, control provider routing, and keep latency within SLAs.

- Compliance-Sensitive Workflows: Enforce PII masking and content policies organization-wide.

- Autonomous Agents: Productionize agent loops with framework integrations and an MCP client for tool access.
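
Several of these use cases depend on watching latency and cost. The sketch below shows the kind of check a dashboard alert performs: a nearest-rank p95 latency and total token cost computed from request logs. The `RequestLog` shape, the per-token prices, and the 2,000 ms SLA are assumptions for illustration, not Portkey's data model or any provider's pricing.

```python
from dataclasses import dataclass


@dataclass
class RequestLog:
    """Hypothetical per-request log record."""
    model: str
    latency_ms: float
    prompt_tokens: int
    completion_tokens: int


# Assumed prices in USD per 1,000 tokens: (prompt, completion). Illustrative only.
PRICE_PER_1K = {"model-a": (0.5, 1.5)}


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n)
    return ordered[rank - 1]


def cost_usd(log: RequestLog) -> float:
    prompt_price, completion_price = PRICE_PER_1K[log.model]
    return (log.prompt_tokens * prompt_price
            + log.completion_tokens * completion_price) / 1000


# Twenty synthetic requests with latencies 100, 200, ..., 2000 ms.
logs = [RequestLog("model-a", float(ms), 200, 100) for ms in range(100, 2001, 100)]
latency_p95 = p95([log.latency_ms for log in logs])
total = sum(cost_usd(log) for log in logs)
sla_ms = 2000.0
print(f"p95={latency_p95:.0f} ms, cost=${total:.2f}, SLA breach={latency_p95 > sla_ms}")
# p95=1900 ms, cost=$5.00, SLA breach=False
```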

Portkey AI Pricing

Portkey AI typically follows a tiered, usage-based model suitable for individual developers, teams, and enterprises, with advanced features and higher limits available on paid plans. Enterprise options are available for organizations that need custom SLAs and governance. For the latest plan details and any free tier or trial availability, refer to the official Portkey website.

Pros and Cons of Portkey AI

Pros:

- Unified AI Gateway simplifies multi-provider routing and reliability.

- Rich observability for tracing, token spend, and latency optimization.

- Built-in guardrails and governance for safer, compliant deployments.

- Seamless integrations with LangChain, CrewAI, and AutoGen for agents.

- MCP client enables secure real-world tool access for AI agents.

Cons:

- Introduces an additional layer to manage in existing infrastructure.

- Advanced features may require plan upgrades as usage scales.

- Teams fully locked into a single LLM provider may see fewer gateway benefits.

FAQs about Portkey AI

Does Portkey AI work with OpenAI, Anthropic, and Google models?

Yes. The AI Gateway is provider-agnostic and supports major LLM providers, so you can route requests across vendors and fall back from one to another.

How is an AI Gateway different from calling an LLM directly?

It adds retries, rate limits, caching, observability, and policy enforcement, improving reliability, cost control, and governance.
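
To illustrate how gateway caching avoids paying twice for the same request, here is a minimal exact-match response cache keyed on a hash of the model and messages. It is a simplified sketch with an assumed 300-second TTL; `ResponseCache` and `fake_llm` are hypothetical and do not reproduce Portkey's cache configuration.

```python
import hashlib
import json
import time
from typing import Callable


class ResponseCache:
    """Exact-match cache: identical (model, messages) requests reuse a stored reply."""

    def __init__(self, ttl_s: float = 300.0) -> None:
        self.ttl_s = ttl_s
        self._store: dict[str, tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(model: str, messages: list[dict[str, str]]) -> str:
        # sort_keys makes the key independent of dict insertion order.
        payload = json.dumps({"model": model, "messages": messages}, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def get_or_call(self, model: str, messages: list[dict[str, str]],
                    call: Callable[[], str]) -> str:
        k = self.key(model, messages)
        entry = self._store.get(k)
        now = time.monotonic()
        if entry is not None and now - entry[0] < self.ttl_s:
            self.hits += 1
            return entry[1]
        self.misses += 1
        reply = call()  # Tokens are only spent on a cache miss.
        self._store[k] = (now, reply)
        return reply


calls = 0


def fake_llm() -> str:
    global calls
    calls += 1
    return "Paris"


cache = ResponseCache()
msgs = [{"role": "user", "content": "Capital of France?"}]
print(cache.get_or_call("model-a", msgs, fake_llm))  # Paris
print(cache.get_or_call("model-a", msgs, fake_llm))  # Paris (served from cache)
print(calls, cache.hits, cache.misses)  # 1 1 1
```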

Can Portkey help with PII and compliance?

Portkey offers guardrails such as PII masking/redaction and moderation so teams can enforce organization-wide policies.
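
As an illustration of what PII masking does before a prompt leaves your network, here is a minimal regex-based redactor. The two patterns (email addresses and US-style phone numbers) are assumptions chosen for brevity; production PII detection needs far broader coverage, and this is not Portkey's guardrail implementation.

```python
import re

# Illustrative patterns only: email addresses and US-style phone numbers.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b(?:\+1[-.\s]?)?\(?\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace each PII match with a [LABEL] placeholder and count replacements."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


masked, found = redact("Email jane.doe@example.com or call 555-123-4567.")
print(masked)  # Email [EMAIL] or call [PHONE].
print(found)   # {'EMAIL': 1, 'PHONE': 1}
```

Returning per-label counts alongside the masked text lets a policy layer log or block requests that contained PII, rather than silently rewriting them.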

Does Portkey integrate with LangChain, CrewAI, and AutoGen?

Yes. It provides integrations that make agent workflows production-ready with tracing and guardrails.
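
To show what tracing an agent workflow means in practice, here is a minimal sketch: a decorator that records a span (name, duration, status) for each agent step, all sharing one trace id. `traced`, `TRACE`, and `TRACE_ID` are hypothetical; a real integration would export spans to an observability backend instead of a Python list.

```python
import functools
import time
import uuid
from typing import Any, Callable

TRACE: list[dict[str, Any]] = []  # Hypothetical in-memory span store.
TRACE_ID = uuid.uuid4().hex        # One trace id shared by every step in a run.


def traced(step_name: str) -> Callable:
    """Record name, duration, and ok/error status for each call of a step."""

    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            status = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                status = "error"
                raise
            finally:
                # Runs on both success and failure, after the step finishes.
                TRACE.append({
                    "trace_id": TRACE_ID,
                    "span": step_name,
                    "status": status,
                    "duration_ms": (time.perf_counter() - start) * 1000,
                })
        return wrapper

    return decorator


@traced("retrieve")
def retrieve(query: str) -> list[str]:
    return [f"doc about {query}"]


@traced("answer")
def answer(query: str) -> str:
    docs = retrieve(query)
    return f"Answer using {len(docs)} doc(s)"


print(answer("refund policy"))
for span in TRACE:
    print(span["span"], span["status"], f"{span['duration_ms']:.3f} ms")
```

Spans are appended when a step finishes, so the nested `retrieve` span is recorded before the enclosing `answer` span.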

What is the MCP client in Portkey?

The MCP client lets AI agents access real-world tools and APIs via the Model Context Protocol with safety controls.
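
Under the Model Context Protocol, a client invokes a tool by sending a JSON-RPC 2.0 request with method `tools/call` and params containing the tool `name` and an `arguments` object. The sketch below builds such a request after a simple allowlist check, as one example of a safety control. The allowlist, tool names, and `build_tool_call` helper are hypothetical and do not describe Portkey's MCP client.

```python
import itertools
import json
from typing import Any

ALLOWED_TOOLS = {"search_docs", "get_order_status"}  # Hypothetical allowlist.
_ids = itertools.count(1)  # Request ids should not be reused within a session.


def build_tool_call(name: str, arguments: dict[str, Any]) -> str:
    """Serialize an MCP tools/call request, refusing tools not on the allowlist."""
    if name not in ALLOWED_TOOLS:
        raise PermissionError(f"tool {name!r} is not allowed")
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(request)


print(build_tool_call("get_order_status", {"order_id": "A-1001"}))
```

Checking the tool name before anything is sent keeps the policy decision on the client side, so a model that requests an unapproved tool fails fast instead of reaching the server.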