Convai

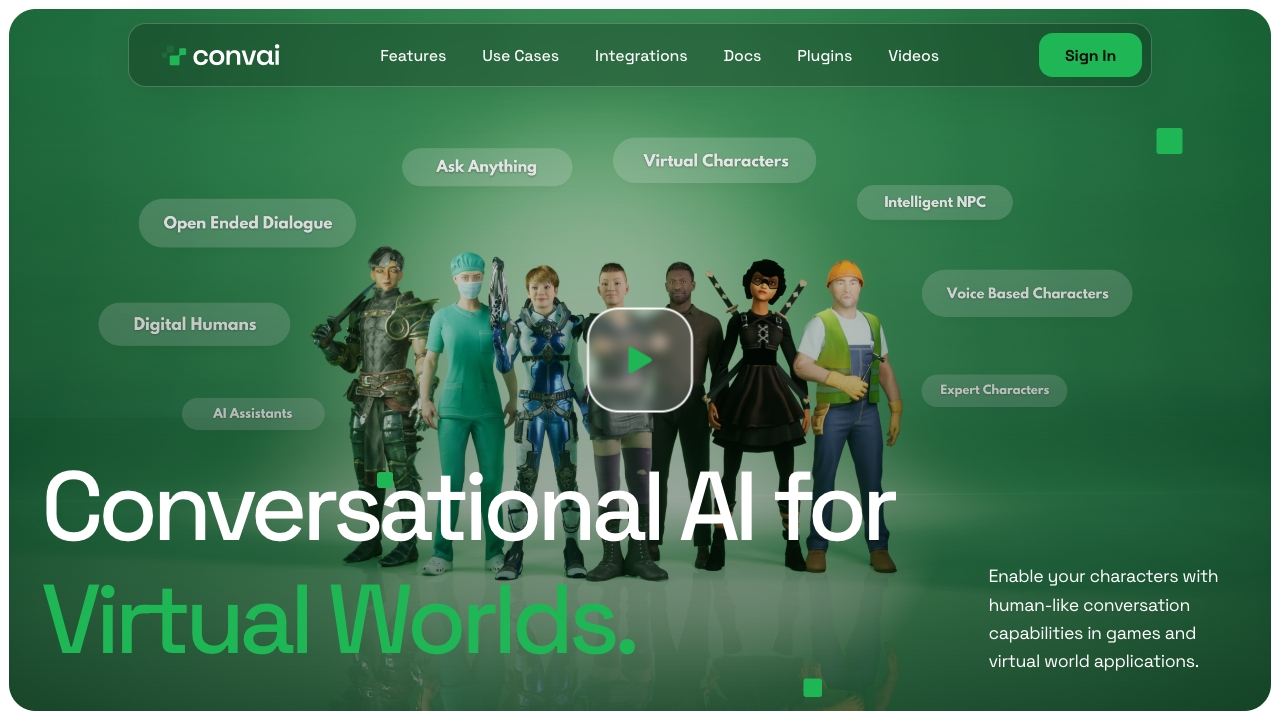

Tool Introduction: Conversational AI APIs for games and XR: real-time NPC speech, TTS, and actions

Inclusion Date: Nov 08, 2025

Tool Information

What is Convai

Convai is a conversational AI platform that enables developers to add real-time voice-driven characters to games, virtual worlds, and XR experiences. Via streaming APIs and SDKs for Unity and Unreal Engine, it blends automatic speech recognition (ASR), natural language understanding (NLU), response generation, and text-to-speech (TTS) to power interactive NPCs and speech-enabled applications. With perception, memory, and action capabilities, characters can listen, understand, speak, navigate, and interact with their environment for dynamic gameplay and immersive metaverse interactions.
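To make the ASR, NLU, generation, and TTS chain concrete, here is a minimal sketch of one conversational turn passing through four stages. Everything in it is an assumption for illustration: `VoicePipeline`, `handle_turn`, and the `stub_*` functions are hypothetical names, not Convai's SDK or API, and the stubs treat "audio" as UTF-8 bytes so the example runs without any speech service.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Hypothetical four-stage voice pipeline (not Convai's actual API)."""
    asr: Callable[[bytes], str]       # audio in -> transcript
    nlu: Callable[[str], dict]        # transcript -> structured meaning
    generate: Callable[[dict], str]   # meaning -> reply text
    tts: Callable[[str], bytes]       # reply text -> audio out

    def handle_turn(self, audio: bytes) -> bytes:
        """Run one player utterance through all four stages in order."""
        transcript = self.asr(audio)
        meaning = self.nlu(transcript)
        reply = self.generate(meaning)
        return self.tts(reply)


# Stub stages: stand-ins for real speech and language services.
def stub_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def stub_nlu(text: str) -> dict:
    intent = "greet" if "hello" in text.lower() else "unknown"
    return {"intent": intent, "text": text}


def stub_generate(meaning: dict) -> str:
    if meaning["intent"] == "greet":
        return "Welcome, traveler."
    return "Could you say that again?"


def stub_tts(reply: str) -> bytes:
    return reply.encode("utf-8")


pipeline = VoicePipeline(stub_asr, stub_nlu, stub_generate, stub_tts)
```

In a real integration each stub would presumably be replaced by a streaming call to the platform, so that stages overlap rather than run strictly one after another.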

Main Features of Convai

- Streaming Speech Recognition (ASR): Low-latency voice input with voice activity detection and interruptible dialog for natural back-and-forth conversations.

- Language Understanding and Generation: Context-aware NLU and multi-turn response generation for believable NPC dialog and task-oriented interactions.

- Text-to-Speech (TTS): Natural voices with configurable styles, speed, and emotions for lifelike character speech.

- Perception and World Awareness: Characters can perceive objects, locations, and player actions to ground responses in the game world.

- Actions and Navigation: Trigger animations, pathfinding, and object interactions directly from conversational intents.

- Memory and Personality: Persistent memory, knowledge grounding, and character profiles to maintain continuity and unique behavior.

- Unity and Unreal Integration: Ready-to-use SDKs, blueprints, and components that speed up prototyping and production.

- Cloud APIs: Scalable, real-time endpoints for voice-to-voice interactions across games, metaverse spaces, and XR apps.

- Safety and Controls: Configurable filters, content controls, and analytics to manage quality and compliance.

- Multilingual Support: Build speech-enabled experiences for global audiences.
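The interruptible dialog mentioned in the feature list can be pictured as playback that stops the moment the player starts talking (often called barge-in). The sketch below is a simplified model under stated assumptions: the function name `speak_with_barge_in` is hypothetical, reply audio is represented as a list of text chunks, and voice activity detection is reduced to a set of chunk indices at which the player is speaking. It is not how Convai implements the feature.

```python
from typing import Iterable, List, Set


def speak_with_barge_in(chunks: Iterable[str],
                        player_speaking_at: Set[int]) -> List[str]:
    """Play reply chunks in order; stop before the first chunk during
    which the (simulated) voice activity detector hears the player."""
    played: List[str] = []
    for index, chunk in enumerate(chunks):
        if index in player_speaking_at:
            break  # barge-in: the character yields the floor
        played.append(chunk)
    return played
```

For example, calling `speak_with_barge_in(["The forge", "is north", "of here"], {2})` plays only the first two chunks, because the player speaks during the third.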

Who Can Use Convai

Convai suits game studios, indie developers, and XR creators who want interactive NPCs and voice-first gameplay. It also helps metaverse platforms, simulation and training providers, educators, and research teams build conversation-based characters, speech-enabled applications, and immersive learning scenarios without building complex speech AI pipelines from scratch.

How to Use Convai

- Sign up and create a project in the Convai console.

- Install the SDK or plugin for Unity or Unreal Engine.

- Create a character profile, defining personality, role, and knowledge sources.

- Configure ASR, NLU, and TTS settings, including language and voice style.

- Bind perception data (objects, locations, player state) to enable world grounding.

- Map intents to in-game actions, animations, and navigation behaviors.

- Implement event hooks for starting/stopping conversations and handling interruptions.

- Test latency and dialog flow in-editor; refine prompts, memory, and safety filters.

- Optimize audio input/output and lip-sync; package and deploy to target platforms.
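The step of mapping intents to in-game actions can be sketched as a small dispatch table. This is an illustration only: `ActionDispatcher`, the intent names `move_to` and `wave`, and the handler functions are hypothetical, and a real Unity or Unreal project would call engine navigation and animation systems instead of returning strings.

```python
from typing import Callable, Dict, List


class ActionDispatcher:
    """Hypothetical intent-to-action table (not part of any Convai SDK)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[dict], str]] = {}
        self.log: List[str] = []  # record of actions the character performed

    def register(self, intent: str, handler: Callable[[dict], str]) -> None:
        """Associate an intent name with a handler function."""
        self._handlers[intent] = handler

    def dispatch(self, intent: str, params: dict) -> bool:
        """Run the handler for an intent; return False if none is mapped."""
        handler = self._handlers.get(intent)
        if handler is None:
            return False
        self.log.append(handler(params))
        return True


# Placeholder handlers standing in for engine calls.
def navigate(params: dict) -> str:
    return f"navigate:{params['target']}"


def wave(params: dict) -> str:
    return "animate:wave"


dispatcher = ActionDispatcher()
dispatcher.register("move_to", navigate)
dispatcher.register("wave", wave)
```

With this setup, `dispatcher.dispatch("move_to", {"target": "forge"})` returns `True` and appends `"navigate:forge"` to `dispatcher.log`, while an unmapped intent such as `"dance"` returns `False` so the caller can fall back to dialog only.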

Convai Use Cases

Studios use Convai to power open-world NPCs that understand player intent and react to game state in real time. XR teams build hands-free training and simulation scenarios with voice guidance and interactive characters. Metaverse creators add conversational greeters, guides, and shop assistants to virtual spaces. Educational apps use speech-enabled tutors and role-play scenarios to enhance engagement and learning outcomes.

Pros and Cons of Convai

Pros:

- End-to-end voice pipeline (ASR, NLU, generation, TTS) with low-latency streaming.

- World-aware characters with perception, memory, and action mapping.

- Native Unity and Unreal Engine integration for faster development.

- Interruptible, multi-turn dialogue for natural conversations.

- Scalable cloud APIs and multilingual capability.

Cons:

- Requires reliable internet connectivity for best real-time performance.

- Latency can vary on constrained devices or networks.

- Integration effort needed to wire actions, navigation, and perception data.

- Costs at scale depend on traffic and voice minutes, which can strain budgets.

FAQs about Convai

- Does Convai work with both Unity and Unreal Engine?

  Yes. Convai provides SDKs and components for Unity and Unreal to speed up integration.

- Can characters perform actions based on conversation?

  Characters can trigger animations, navigation, and object interactions mapped from intents and context.

- Is real-time voice supported end to end?

  Convai supports streaming ASR and TTS for low-latency, voice-to-voice interactions.

- Can I ground characters in my game’s knowledge?

  Yes. You can attach knowledge bases and memory so characters respond with game-specific context.

- Is multilingual speech available?

  Convai supports multiple languages for recognition and synthesis to reach global audiences.