- Home

- AI Developer Tools

- Zeabur

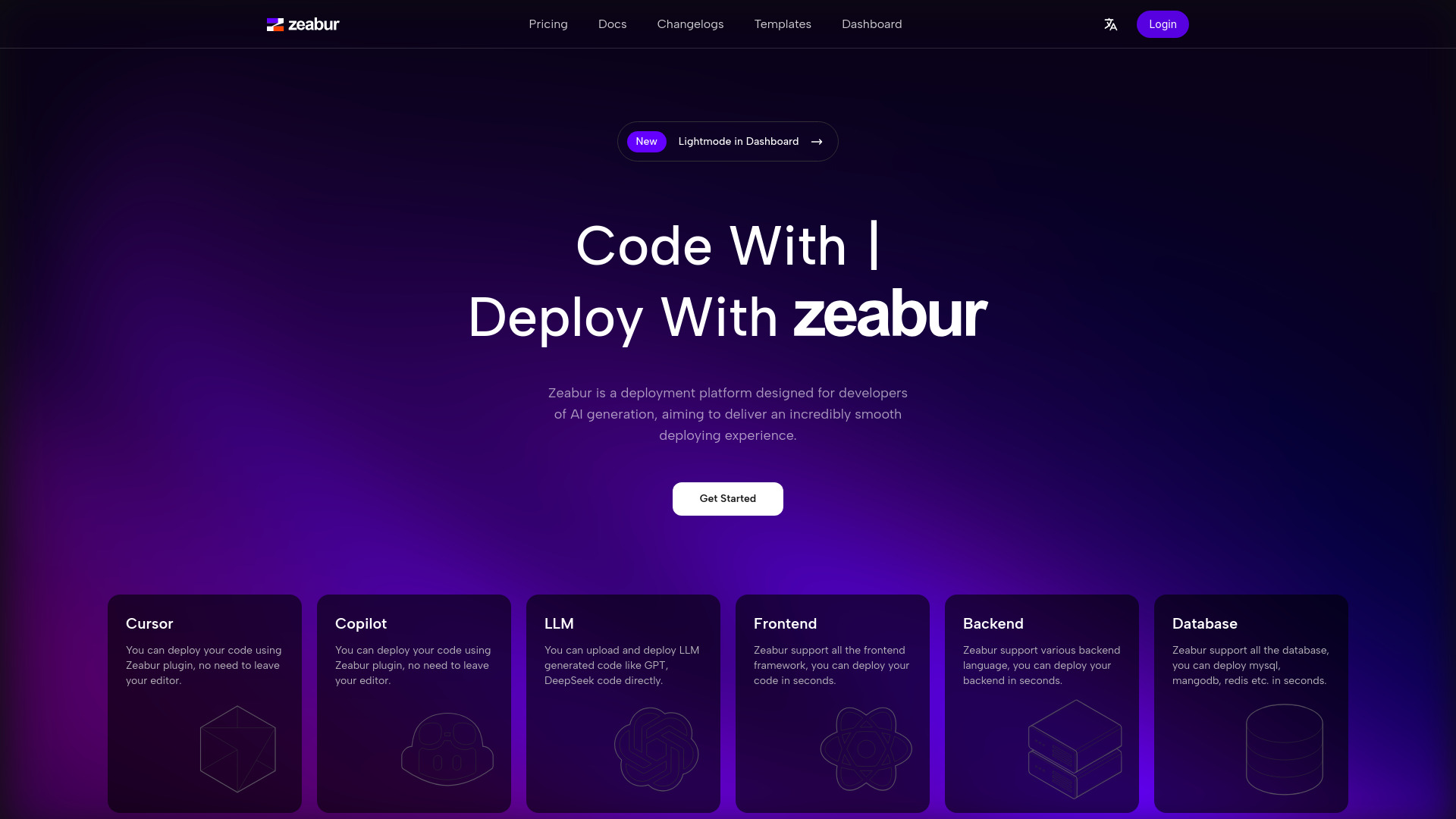

Zeabur

Tool Introduction: Zeabur AI lets you ship AI apps quickly across stacks, with auto-scaling and CI/CD.

Inclusion Date: Oct 28, 2025

Tool Information

What is Zeabur AI

Zeabur AI is a cloud deployment platform built for the AI era, giving teams a smooth path from code to production. It supports diverse back-end languages, modern front-end frameworks, and popular databases, so you can launch hand-written code, LLM-generated code, APIs, web apps, and data stores in seconds. Automatic code analysis, service aggregation, CI/CD, auto-scaling, and pay-as-you-go pricing reduce DevOps overhead. The platform also integrates shared clusters, server binding, VPS hosting, a template marketplace, object storage, and management of domains, environment variables, and files, which streamlines delivery end to end.

Zeabur AI Main Features

- Automatic code analysis: Detects frameworks and runtime needs to generate sensible deployment defaults.

- Fast multi-stack support: Runs various back-end languages, front-end frameworks, and databases in one place.

- Deploy LLM-generated code: Seamlessly launch AI-assisted code alongside traditional services.

- Service aggregation: Connect frontends, backends, and databases into coherent services with minimal wiring.

- CI/CD integration: Trigger builds and deployments directly from your repository workflows.

- Auto-scaling: Scale up or down based on demand to balance performance and cost.

- Pay-as-you-go: Pay only for the compute, storage, and bandwidth you actually use.

- Shared clusters: Reduce costs by running workloads on shared infrastructure when appropriate.

- Server binding and VPS hosting: Choose between shared resources or dedicated VPS for isolation and control.

- Template marketplace: Start quickly with curated templates for common stacks and architectures.

- Object storage: Store and serve assets, models, and artifacts reliably.

- Environment variable management: Centralize secrets and configuration for all services.

- Domain and file management: Map custom domains and manage project files in one platform.
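To make the idea of automatic code analysis concrete, here is a minimal sketch of one common approach: checking a project directory for well-known manifest files. This is an illustration only and is not Zeabur's actual detection logic; the `MARKERS` table and the `detect_stack` function are hypothetical names introduced for this example.

```python
from pathlib import Path

# Hypothetical mapping of manifest files to stack labels.
# Illustrative only; this is not Zeabur's actual rule set.
MARKERS = [
    ("package.json", "node"),
    ("requirements.txt", "python"),
    ("pyproject.toml", "python"),
    ("go.mod", "go"),
    ("Cargo.toml", "rust"),
    ("Dockerfile", "docker"),
]


def detect_stack(project_dir):
    """Return the label of the first marker file found, or 'unknown'."""
    root = Path(project_dir)
    for filename, label in MARKERS:
        if (root / filename).is_file():
            return label
    return "unknown"
```

Calling `detect_stack(".")` in a repository that contains a `go.mod` file (and no `package.json`, `requirements.txt`, or `pyproject.toml`) returns `"go"`. A real analyzer would likely inspect file contents as well, for example to tell frameworks apart within one language.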

Who Should Use Zeabur AI

Zeabur AI suits developers and teams who want rapid, reliable deployments without heavy DevOps overhead. It fits AI product builders shipping LLM-backed APIs, web app teams combining frontends and databases, startups validating MVPs, indie hackers and agencies iterating quickly, and engineering groups standardizing deployment workflows across multiple services.

How to Use Zeabur AI

- Sign up and create a new project for your app or service group.

- Connect your code repository or import an existing project template from the marketplace.

- Let automatic code analysis detect frameworks, build steps, and runtime settings.

- Provision required resources: choose databases, configure object storage, and bind to shared clusters or a VPS.

- Set environment variables and secrets; define any needed service aggregation rules.

- Configure domains and routing for your frontend and API endpoints.

- Trigger the initial build and deploy; verify health checks and basic functionality.

- Enable CI/CD for continuous deployments and set auto-scaling policies.
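The environment variable and health check steps above can be sketched from the application's side. The example below is a minimal sketch using only the Python standard library; the variable names `PORT` and `DATABASE_URL`, the `/health` path, and the helper names are assumptions made for illustration, not names confirmed by Zeabur.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def load_config(env=None):
    """Read settings from environment variables (names are assumptions)."""
    env = os.environ if env is None else env
    return {
        "port": int(env.get("PORT", "8080")),
        "database_url": env.get("DATABASE_URL", ""),
    }


def health_payload(config):
    """Build the JSON body returned by the health endpoint."""
    return {"status": "ok", "database_configured": bool(config["database_url"])}


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Answer only the (assumed) /health path; everything else is a 404.
        if self.path == "/health":
            body = json.dumps(health_payload(load_config())).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)


def run():
    """Start the server on the configured port; blocks until interrupted."""
    config = load_config()
    HTTPServer(("0.0.0.0", config["port"]), HealthHandler).serve_forever()
```

Calling `run()` starts the server, after which a request to `/health` returns a small JSON status document. Keeping configuration in environment variables means the same build can run unchanged across environments, with secrets set in the platform rather than committed to the repository.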

Zeabur AI Industry Use Cases

- A SaaS startup launches an LLM-powered customer support API, deploying backend services, a React dashboard, and a managed database in minutes.

- An agency ships a multi-tenant marketing site, using templates, shared clusters for cost control, and domain management for client subdomains.

- A data team hosts inference endpoints and model assets via object storage, binding to a VPS for stronger isolation and predictable performance.

Zeabur AI Pricing

Zeabur AI uses a pay-as-you-go model, charging based on consumed resources such as compute, memory, storage, and bandwidth. Teams can optimize spend with shared clusters for economical workloads or select VPS hosting for dedicated resources. Exact costs depend on usage and chosen configurations; review your resource profile to estimate monthly spend.
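A rough monthly estimate under a pay-as-you-go model is simply usage multiplied by unit price, summed over each metered resource. The sketch below shows that arithmetic; every rate in `RATES` is a made-up placeholder, not a Zeabur price, and the resource names and the `estimate_monthly_cost` helper are hypothetical.

```python
# Hypothetical unit prices in USD. These are placeholders, not Zeabur's
# rates; substitute the figures from your own plan or invoice.
RATES = {
    "cpu_core_hours": 0.02,
    "memory_gb_hours": 0.005,
    "storage_gb_months": 0.10,
    "egress_gb": 0.05,
}


def estimate_monthly_cost(usage, rates=RATES):
    """Sum usage * unit price over every metered resource in `usage`."""
    unknown = set(usage) - set(rates)
    if unknown:
        raise KeyError(f"no rate defined for: {sorted(unknown)}")
    return round(sum(amount * rates[name] for name, amount in usage.items()), 2)


# Example profile: one core and 2 GB of memory running for a 30-day month,
# plus 20 GB of storage and 100 GB of outbound traffic.
usage = {
    "cpu_core_hours": 720,
    "memory_gb_hours": 1440,
    "storage_gb_months": 20,
    "egress_gb": 100,
}
monthly_estimate = estimate_monthly_cost(usage)
```

With these placeholder rates the profile works out to 14.40 + 7.20 + 2.00 + 5.00 = 28.60 USD. The value of the exercise is in seeing which resource dominates the total, which indicates whether a shared cluster or a VPS is the more economical choice.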

Zeabur AI Pros and Cons

Pros:

- Fast from code to production with automatic analysis and sensible defaults.

- Unified support for backends, frontends, and databases.

- Designed for AI workflows, including LLM-generated code.

- Service aggregation simplifies multi-service architectures.

- CI/CD and auto-scaling built into the deployment flow.

- Cost control via pay-as-you-go and shared clusters; flexibility with VPS.

- Integrated object storage, domain, variable, and file management.

Cons:

- As with any managed platform, complex setups risk vendor lock-in.

- Advanced configurations may involve a learning curve.

- Costs can increase with sustained high traffic or large-scale workloads.

- Less granular control than bespoke infrastructure for niche runtimes.

- Compliance or data residency needs may dictate VPS or external services.

Zeabur AI FAQs

- What languages and frameworks does Zeabur AI support?

  It supports various back-end languages, modern front-end frameworks, and popular databases, enabling full-stack deployments on one platform.

- Can I deploy LLM-generated code?

  Yes. Zeabur AI is designed to handle both traditional codebases and LLM-generated code with the same deployment workflow.

- Does Zeabur AI provide auto-scaling?

  Yes. You can configure auto-scaling to match traffic demand and optimize costs.

- How does service aggregation help?

  It connects frontends, backends, and databases into a cohesive service, reducing manual wiring and deployment complexity.

- Can I host databases on Zeabur AI?

  Yes. You can deploy and manage databases alongside your application services.

- How is pricing structured?

  Pricing is pay-as-you-go, based on actual resource consumption. You can use shared clusters for cost efficiency or a VPS for isolation.