Vellum

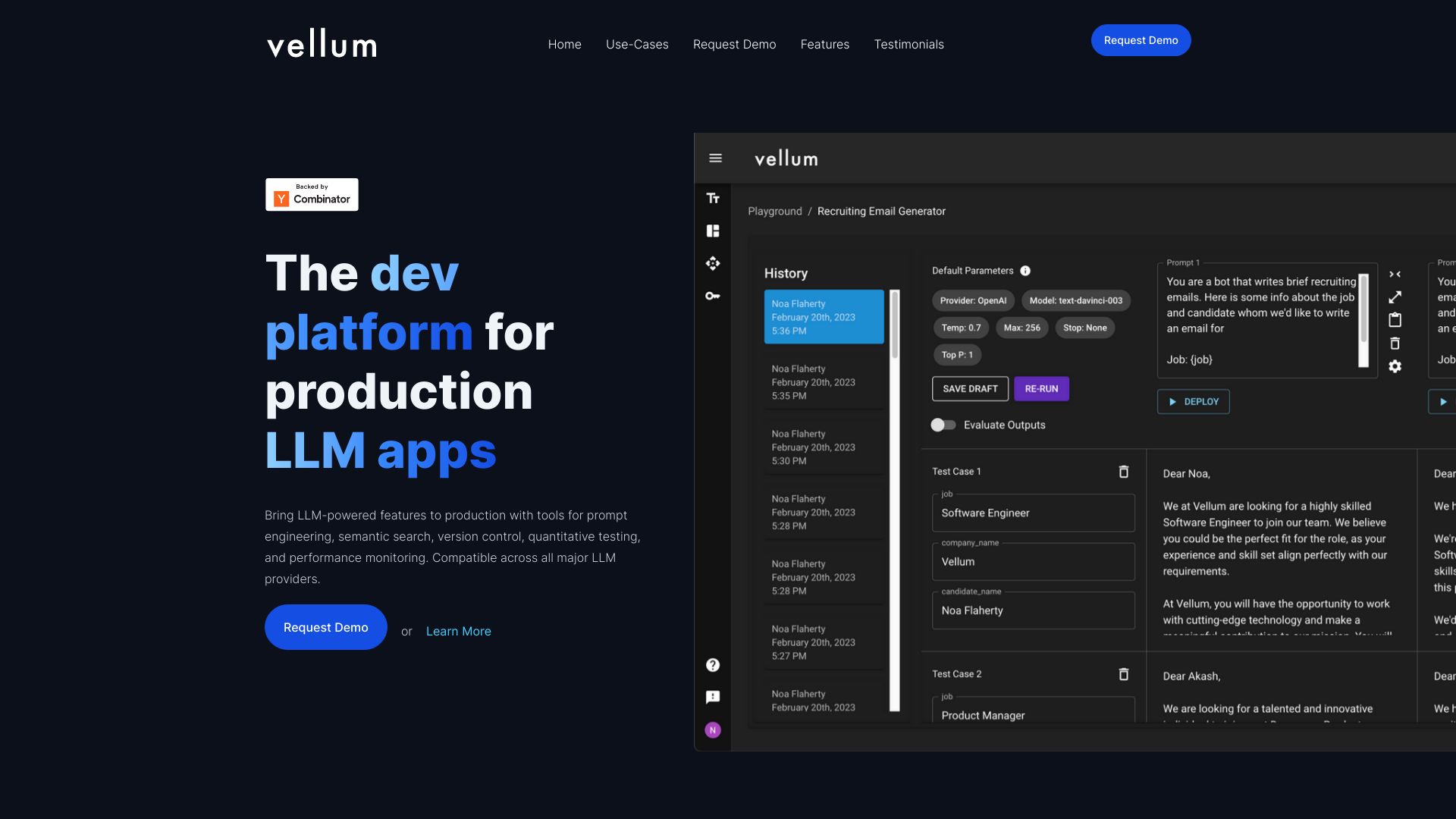

Tool Introduction: Design, test, and deploy AI features with visual workflows and tracking.

Inclusion Date: Oct 21, 2025

Tool Information

What is Vellum AI

Vellum AI is a platform that helps teams move AI features from early prototypes to reliable, production-grade systems. It unifies experimentation, prompt engineering, evaluation, deployment, monitoring, and collaboration in one place. With a visual workflow builder, SDK, retrieval UIs and APIs, one-click deployment, and observability tools to track AI decisions, Vellum streamlines the full lifecycle of LLM applications. Product and engineering teams can iterate faster, compare approaches with measurable metrics, and ship features that are traceable, reproducible, and easier to maintain.

Vellum AI Key Features

- Visual workflow builder: Design and orchestrate LLM pipelines, branching logic, and data flows without boilerplate code.

- Prompt engineering tools: Create, version, and refine prompts with variables, templates, and structured inputs for consistent outputs.

- Evaluation metrics: Run systematic tests to compare prompts, models, and parameters using quantitative and qualitative scoring.

- Retrieval UIs and APIs: Build and test RAG-style experiences with built-in retrieval components and endpoints.

- SDK and integrations: Control Vellum workflows programmatically and embed them in your application stack.

- One-click deployment: Promote evaluated workflows to production with stable endpoints and configuration management.

- Observability and monitoring: Track model choices, prompts, inputs/outputs, and decisions for debugging and governance.

- Collaboration: Share experiments, standardize best practices, and coordinate across product, data, and engineering teams.
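To make the prompt engineering feature above more concrete, here is a minimal sketch of a prompt template with variables and a content-derived version id. It uses only the Python standard library and is not Vellum's SDK; the names `SUPPORT_PROMPT`, `render_prompt`, and `prompt_version` are hypothetical and chosen for illustration.

```python
import hashlib
from string import Template

# Hypothetical prompt template; the variable names are illustrative only.
SUPPORT_PROMPT = Template(
    "You are a support assistant for $product.\n"
    "Answer the question in at most $max_sentences sentences.\n"
    "Question: $question"
)


def render_prompt(template: Template, **variables: str) -> str:
    """Fill in the template; raises KeyError if a variable is missing."""
    return template.substitute(**variables)


def prompt_version(template: Template) -> str:
    """Derive a short, stable version id from the template text."""
    return hashlib.sha256(template.template.encode("utf-8")).hexdigest()[:8]


if __name__ == "__main__":
    prompt = render_prompt(
        SUPPORT_PROMPT,
        product="Acme Notes",
        max_sentences="2",
        question="How do I export my notes?",
    )
    print(prompt_version(SUPPORT_PROMPT))
    print(prompt)
```

Hashing the template text means any edit to the prompt produces a new version id, which is one simple way to keep evaluation results tied to the exact prompt that produced them.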

Who Is Vellum AI For

Vellum AI suits product managers, ML/AI engineers, data scientists, and prompt engineers building LLM-powered features such as chat assistants, content generation, classification, extraction, and RAG. It is valuable for startups validating ideas, growth teams scaling prototypes, and enterprises that need evaluation, reproducibility, and observability to operate AI safely in production.

How to Use Vellum AI

- Create a workspace, then connect your model providers or set up the SDK.

- Define goals and test cases; import sample inputs and expected outcomes for evaluation.

- Design your pipeline in the visual workflow builder, wiring prompts, tools, and retrieval steps.

- Engineer prompts with variables and constraints; expose the model choice and settings such as temperature as parameters.

- Add retrieval using the provided UIs/APIs if your use case needs enterprise knowledge or RAG.

- Run evaluations to compare alternatives against metrics and review outputs side by side.

- Iterate based on results; version winning configurations for traceability.

- Deploy with one click to generate a production-ready endpoint or integrate via the SDK.

- Monitor logs, prompts, and outputs in observability dashboards; refine as real data flows in.
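The evaluate-and-iterate steps above can be sketched as a small comparison loop: define test cases, run each candidate configuration against them, and keep the best scorer. This is an illustrative sketch, not Vellum's evaluation API. The candidates are keyword-based stubs standing in for real prompt and model combinations, and `EvalCase`, `Candidate`, `keyword_classifier`, and `accuracy` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EvalCase:
    text: str
    expected: str


@dataclass(frozen=True)
class Candidate:
    name: str
    run: Callable[[str], str]  # stands in for a prompt + model + parameters


def keyword_classifier(keywords: dict[str, str], default: str) -> Callable[[str], str]:
    """Build a stub 'model' that labels text by the first matching keyword."""
    def classify(text: str) -> str:
        lowered = text.lower()
        for keyword, label in keywords.items():
            if keyword in lowered:
                return label
        return default
    return classify


def accuracy(candidate: Candidate, cases: list[EvalCase]) -> float:
    """Fraction of cases where the output exactly matches the expected label."""
    hits = sum(candidate.run(case.text) == case.expected for case in cases)
    return hits / len(cases)


CASES = [
    EvalCase("I was charged twice this month", "billing"),
    EvalCase("The app crashes on login", "bug"),
    EvalCase("Please refund my last invoice", "billing"),
    EvalCase("How do I change my avatar?", "other"),
]

CANDIDATES = [
    Candidate("v1", keyword_classifier({"charged": "billing"}, "other")),
    Candidate("v2", keyword_classifier(
        {"charged": "billing", "refund": "billing", "crash": "bug"}, "other")),
]

# Score every candidate on the same cases, then pick the highest scorer.
scores = {c.name: accuracy(c, CASES) for c in CANDIDATES}
best = max(scores, key=scores.get)
print(scores, best)
```

Keeping the test cases fixed while swapping candidates is what makes the scores comparable; in practice the exact-match metric would be replaced by whatever quality measure fits the use case.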

Vellum AI Industry Use Cases

In customer support, teams can build retrieval-augmented agents that pull policies and product docs to reduce escalations. E-commerce teams can deploy classification and enrichment workflows for catalog data. In fintech, evaluation tools help harden underwriting or KYC assistants before launch. SaaS platforms can standardize prompt templates and monitor content generation quality across markets. Internal ops teams can deploy summarization and routing pipelines with end-to-end traceability.

Vellum AI Pros and Cons

Pros:

- Unified workflow from experimentation to production, reducing tool fragmentation.

- Structured prompt engineering and evaluation for measurable quality improvements.

- One-click deployment with stable endpoints and version control for reproducibility.

- Deep observability to debug prompts, model choices, and outputs in context.

- Collaboration features that help teams share, review, and standardize best practices.

Cons:

- Learning curve for teams new to LLM ops and structured evaluations.

- Requires integration effort to align with existing data sources and services.

- Operational costs may grow with scale, evaluations, and production traffic.

- Reliance on the platform can add migration overhead if you switch tools later.

Vellum AI FAQs

What kinds of models can I use with Vellum AI?

Vellum AI is designed to work with major LLM providers and custom setups via its SDK, so you can choose models that fit your use case and governance needs.

Can I build retrieval-augmented generation (RAG) workflows?

Yes. The platform includes retrieval UIs and APIs to incorporate enterprise knowledge into your pipelines and evaluate end-to-end performance.
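As a rough picture of what a retrieval step does in a RAG pipeline, the sketch below ranks a few documents against a query and places the best matches into the prompt. It deliberately uses plain token overlap instead of embeddings or a vector store, and it does not call Vellum's retrieval APIs; `tokenize`, `retrieve`, `build_prompt`, and the sample documents are all hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by shared query tokens; drop documents with no overlap."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    matches = [item for item in scored if item[0] > 0]
    ranked = sorted(matches, key=lambda item: item[0], reverse=True)
    return [doc for _, doc in ranked[:top_k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Place the retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


DOCS = [
    "Refunds are issued within 14 days of purchase.",
    "Passwords can be reset from the account settings page.",
    "Shipping takes 3 to 5 business days.",
]

if __name__ == "__main__":
    question = "How do I reset my password?"
    print(build_prompt(question, retrieve(question, DOCS)))
```

Evaluating end-to-end performance then means checking both halves: whether the right passages were retrieved and whether the final answer used them correctly.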

How does evaluation work?

You can define test cases and metrics, then compare prompts, models, and parameters. Results help you select configurations that best meet quality targets.

How do I deploy to production?

After selecting a validated workflow, use one-click deployment to expose a stable endpoint or integrate programmatically through the SDK.

What monitoring is available after launch?

Observability tools let you trace prompts, inputs, outputs, and model decisions to debug issues, track drift, and guide ongoing improvements.
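For a sense of what tracing prompts, inputs, and outputs involves, here is a minimal sketch that wraps a model call and records one JSON line per request. It is an assumption about the general shape of such a record, not Vellum's observability format; `TraceRecord`, `traced_call`, and the field names are hypothetical.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field


@dataclass
class TraceRecord:
    model: str
    prompt_version: str
    inputs: dict
    output: str
    latency_ms: float
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    timestamp: float = field(default_factory=time.time)


def traced_call(model: str, prompt_version: str, inputs: dict, call, sink: list) -> str:
    """Run `call(inputs)`, time it, and append a JSON trace line to `sink`."""
    start = time.perf_counter()
    output = call(inputs)
    latency_ms = (time.perf_counter() - start) * 1000
    record = TraceRecord(model, prompt_version, inputs, output, latency_ms)
    sink.append(json.dumps(asdict(record)))
    return output


if __name__ == "__main__":
    log: list = []
    # The lambda is a stub standing in for a real model call.
    answer = traced_call(
        "stub-model", "a1b2c3d4", {"question": "hi"},
        lambda inputs: inputs["question"].upper(), log,
    )
    print(answer)
    print(log[0])
```

Recording the prompt version and model alongside each input and output is what lets a later reviewer reproduce a specific response or compare behavior before and after a change.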