- Home

- AI Dubbing

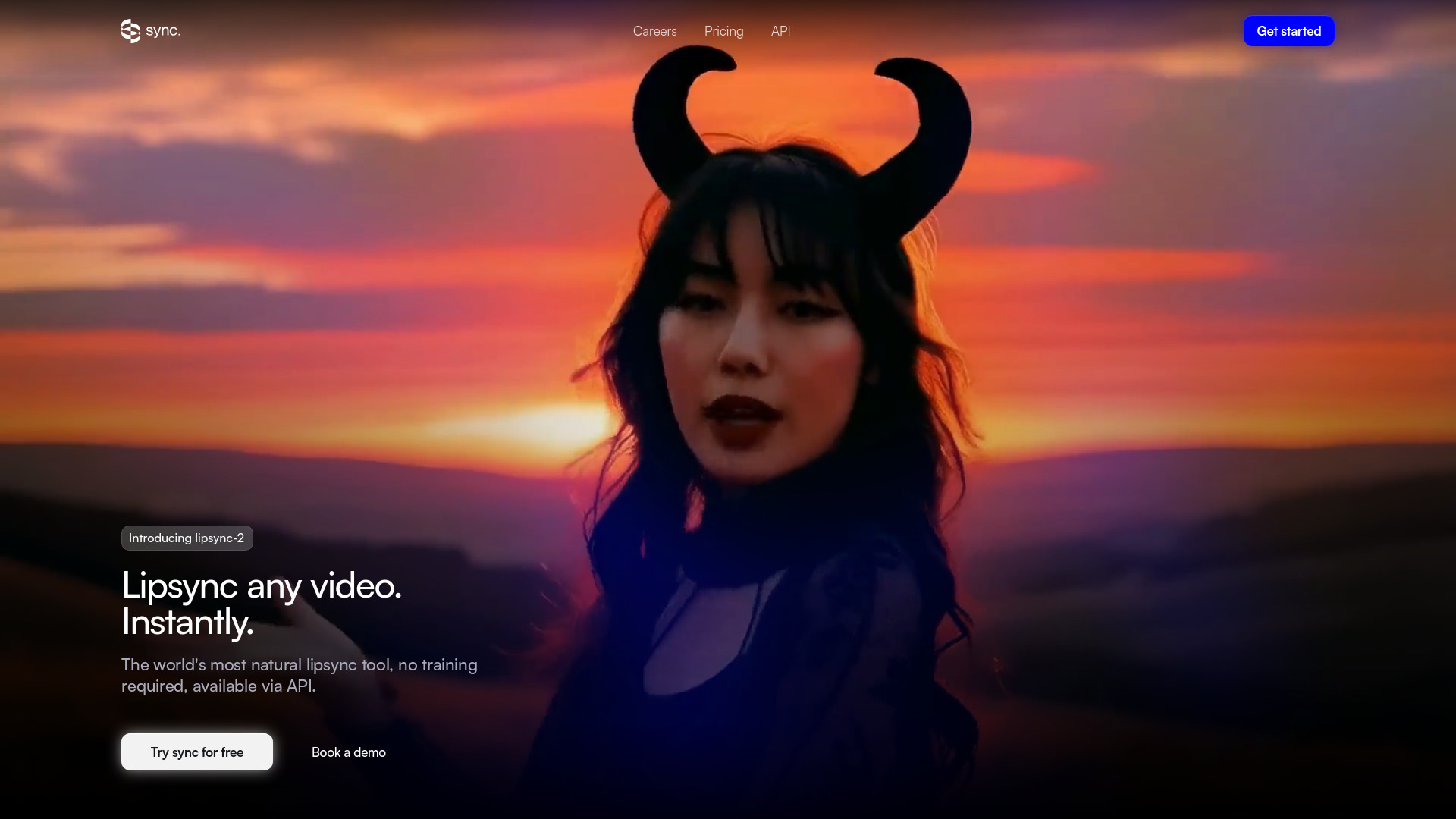

- sync so

sync so


Tool Introduction: Real-time AI video lip sync for any audio or text, plus translation and an API.


Inclusion Date: Oct 21, 2025

What is sync so AI

sync so AI is an AI video lip-sync editor from the founders of Wav2Lip. It lets you match a speaker’s mouth movements to any audio track or typed text, producing photorealistic, frame-accurate alignment in real time. With seamless translation and multilingual dubbing, it helps creators localize videos for global audiences without reshoots. A developer-friendly API enables programmatic reanimation, batch processing, and human-understanding features, so teams can create, correct, and scale on-screen dialogue with speed, control, and consistency.

sync so AI Main Features

- Real-time lip sync: Generate accurate mouth movements live, enabling instant previews and faster edits.

- Audio or text input: Sync videos to uploaded audio or typed text for flexible dialogue replacement and dubbing.

- Seamless translation: Localize content with multilingual lip-sync alignment for global reach and consistent timing.

- High-fidelity reanimation: Preserve identity and expressions while updating speech for natural-looking results.

- API access: Automate workflows, batch-process clips, and integrate lip-sync, reanimation, and human-understanding into apps and pipelines.

- Editing efficiency: Fix misreads, update scripts, or repurpose videos without reshooting, reducing cost and turnaround.

- Creator-to-enterprise scalability: Suitable for quick social edits or large-scale localization projects.

Who Should Use sync so AI

Ideal for video editors, creators, and marketers who need fast dialogue replacement, multilingual dubbing, or content localization. Helpful for e-learning teams updating courses, product and support teams standardizing tutorials, and studios adapting content for international audiences. Developers and platform builders can use the API to add lip-sync and video reanimation to automated media workflows.

How to Use sync so AI

- Sign up and open a project, then upload your source video.

- Add an audio track or enter text that you want the speaker to say.

- Select language and translation options if you need multilingual output.

- Adjust sync and quality settings, then preview real-time results.

- Refine timing or segments as needed, and render the final video.

- Use the API to batch-process or integrate lip-sync into your app or pipeline.
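The batch step above can be sketched in code. This is a minimal sketch, not sync so's actual client: the endpoint `API_URL`, the `X-Api-Key` header, and the payload fields (`video`, `audio`, `text`, `target_language`) are hypothetical placeholders chosen for illustration, so check the official API reference for the real schema. It pairs each source clip with its replacement audio (or text), builds one job payload per clip, and prepares an HTTP request without sending it.

```python
import json
import urllib.request
from dataclasses import dataclass
from typing import List, Optional

# HYPOTHETICAL endpoint, header, and field names for illustration only;
# they are not taken from sync so's API documentation.
API_URL = "https://api.example.com/v1/lipsync"


@dataclass
class LipSyncJob:
    video_url: str                          # source clip to re-animate
    audio_url: Optional[str] = None         # replacement dialogue as audio...
    text: Optional[str] = None              # ...or as typed text
    target_language: Optional[str] = None   # set only for translated output

    def to_payload(self) -> dict:
        # The workflow takes either an audio track or text, so require exactly one.
        if (self.audio_url is None) == (self.text is None):
            raise ValueError("provide exactly one of audio_url or text")
        payload: dict = {"video": {"url": self.video_url}}
        if self.audio_url is not None:
            payload["audio"] = {"url": self.audio_url}
        else:
            payload["text"] = self.text
        if self.target_language:
            payload["target_language"] = self.target_language
        return payload


def build_batch(video_urls: List[str], audio_urls: List[str]) -> List[dict]:
    """Pair each clip with its replacement audio: one job payload per clip."""
    if len(video_urls) != len(audio_urls):
        raise ValueError("video_urls and audio_urls must have the same length")
    return [LipSyncJob(v, audio_url=a).to_payload() for v, a in zip(video_urls, audio_urls)]


def make_request(payload: dict, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for one job."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-Api-Key": api_key},
        method="POST",
    )
```

Each prepared `Request` could then be sent with `urllib.request.urlopen` once the real endpoint, authentication header, and payload schema are confirmed. Keeping payload construction separate from sending makes the batch easy to validate before any compute is spent.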

sync so AI Industry Use Cases

Media and entertainment teams dub trailers and short-form content without studio sessions. E-learning providers update lessons across languages while keeping instructors on screen. Marketing teams repurpose creator videos for different regions. SaaS companies align onboarding and support videos with new product messaging. Research and accessibility projects use the API to reanimate and analyze human speech patterns in video at scale.

sync so AI Pros and Cons

Pros:

- Accurate, real-time AI lip sync built by the team behind Wav2Lip.

- Works with audio or text for flexible dialogue replacement and dubbing.

- Seamless translation for multilingual, global distribution.

- API for automation, batch processing, and developer integrations.

- Reduces reshoots and speeds up localization workflows.

Cons:

- Output quality depends on source video clarity, lighting, and framing.

- Challenging angles or occlusions may require manual adjustments.

- Processing at scale can incur compute costs and longer render times.

- Language coverage and voice options vary; confirm that your target languages and voices are supported.

- Requires proper consent and rights when altering real people in video.

sync so AI FAQs


Does sync so AI work in real time?

Yes. It offers a real-time lip-sync preview; latency depends on project settings and infrastructure.


Can I lip-sync to typed text?

Yes. You can input text and generate aligned mouth movements; you may pair it with an audio track or text-to-speech as your workflow requires.


Does it support multiple languages?

It is designed for multilingual translation and dubbing. Check the official documentation for current language support.


Is there an API for developers?

Yes. The API enables programmatic video reanimation, batch processing, and human-understanding features.
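Batch rendering through an API is often asynchronous: a client submits a job and then checks back until it finishes. Assuming sync so's API works this way (an assumption; the page only says it supports batch processing), a client-side polling loop might look like the sketch below. The status values `COMPLETED` and `FAILED` are hypothetical, and `fetch_status` is a caller-supplied function (for example, a GET on a job URL) so the helper does not assume any particular endpoint.

```python
import time
from typing import Callable, Dict

# HYPOTHETICAL terminal status values; check the official API reference for the real ones.
TERMINAL_STATES = {"COMPLETED", "FAILED"}


def wait_for_job(
    job_id: str,
    fetch_status: Callable[[str], Dict],
    interval: float = 5.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict:
    """Poll fetch_status(job_id) until the job reaches a terminal state.

    fetch_status is supplied by the caller, so this helper stays independent
    of any particular HTTP client or endpoint. sleep is injectable for testing.
    """
    waited = 0.0
    while True:
        status = fetch_status(job_id)
        if status.get("status") in TERMINAL_STATES:
            return status
        if waited >= timeout:
            raise TimeoutError(f"job {job_id} not finished after {timeout} seconds")
        sleep(interval)
        waited += interval
```

Injecting `fetch_status` and `sleep` keeps the loop testable offline: a fake status function can replay a sequence such as pending, processing, completed, and a no-op `sleep` avoids real waiting. For large batches, a fixed interval could be replaced with exponential backoff to reduce request volume.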


What about rights and consent?

Only use content you have permission to edit, and follow local laws and platform policies when reanimating real people in video.