OpenRouter

Tool Introduction: Unified, OpenAI-compatible API for many LLMs, with cost/latency routing and no subscription fees.

Inclusion Date: Oct 21, 2025

Tool Information

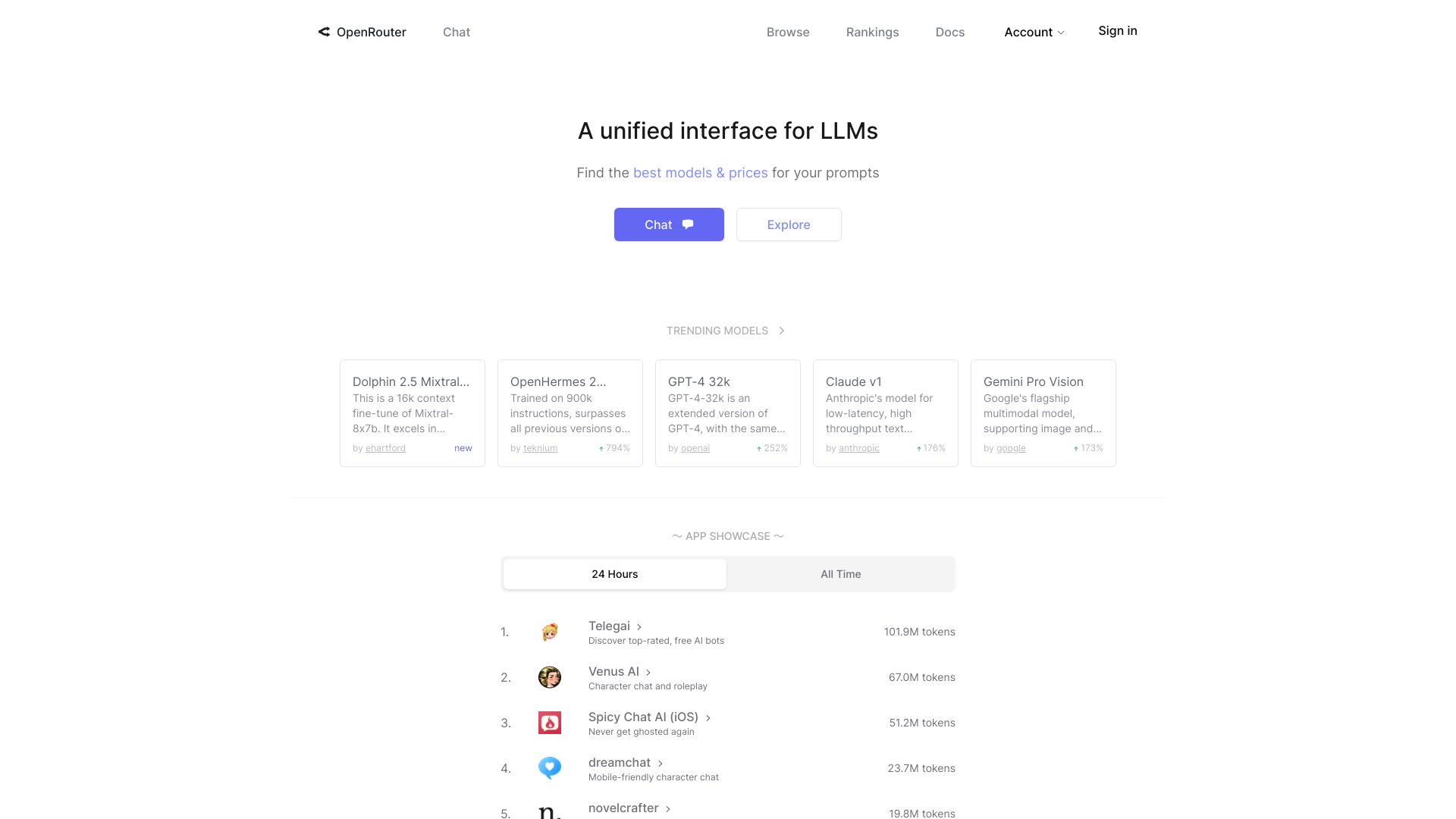

What is OpenRouter AI

OpenRouter AI is a unified interface for large language models (LLMs) that lets developers access major models through a single, OpenAI-compatible API. It centralizes pricing and routing, so you can pick the right model for each prompt and tune the trade-off between cost, quality, and latency. Its distributed infrastructure improves availability and throughput. With routing curves, model routing visualization, price/performance optimization, and custom data policies, OpenRouter streamlines multi-model development, offering competitive per-model pricing, resilient uptime, and no subscription fees.

OpenRouter AI Main Features

- OpenAI-compatible API: Drop-in compatibility with the OpenAI SDK to reduce integration effort and accelerate migration.

- Unified model access: Query a wide range of LLMs through one gateway, simplifying provider management and versioning.

- Routing curves: Define how traffic is distributed across models to balance speed, quality, and cost.

- Model routing visualization: Observe how requests flow, compare outcomes, and iteratively refine routes.

- Distributed infrastructure: Higher availability and stable uptime through multi-region, provider-agnostic architecture.

- Price/performance optimization: Compare model prices and tune prompts or routing to control spend.

- Custom data policies: Configure data handling to align with privacy and compliance needs.

- No subscription fees: Pay per usage at model-specific rates without mandatory monthly plans.
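
Routing curves themselves are configured on the OpenRouter side, and this page does not describe their format. As a rough client-side analogue of the same idea, the Python sketch below splits traffic between two models by weight. The model IDs and the 70/30 weights are hypothetical, and `pick_model` is an illustrative helper, not part of any OpenRouter SDK.

```python
import hashlib

# Hypothetical traffic split; model IDs are illustrative examples only.
ROUTE_WEIGHTS = {
    "meta-llama/llama-3.1-8b-instruct": 0.7,  # fast, low-cost tier
    "openai/gpt-4o": 0.3,  # higher-quality tier
}


def pick_model(request_id: str, weights: dict[str, float]) -> str:
    """Map a request or user ID to a model in proportion to `weights`.

    Hashing the ID, instead of calling random(), sends the same ID to the
    same model every time.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Turn the first 8 bytes of a SHA-256 digest into a number in [0, 1].
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64
    cumulative = 0.0
    for model, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return model
    # Floating-point rounding can leave `point` at or past the last boundary.
    return next(reversed(weights))


print(pick_model("user-42", ROUTE_WEIGHTS))
```

Hashing a stable user or session ID keeps each user on one model, which makes A/B comparisons of quality and latency cleaner than a fresh random draw per request.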

Who Should Use OpenRouter AI

OpenRouter AI suits developers, startups, and enterprises that need a vendor-agnostic LLM layer. It benefits product teams running A/B tests, MLOps engineers managing multi-model deployments, researchers benchmarking models, and platforms that must balance accuracy, latency, and cost across diverse workloads.

How to Use OpenRouter AI

- Create an account and generate an API key in your OpenRouter dashboard.

- Point your client at the OpenRouter endpoint (base URL `https://openrouter.ai/api/v1`) and authenticate with your API key; requests keep the OpenAI-compatible format.

- Select a model from the catalog and set its identifier (for example `openai/gpt-4o`) as the `model` field in your requests.

- Optionally configure routing curves or fallbacks to distribute traffic across multiple models.

- Send test prompts, then review latency, quality, and cost metrics.

- Use routing visualization to refine model selection and adjust traffic weights.

- Set data handling preferences to match your organization’s policy.

- Move to production with monitoring, retries, and periodic model evaluations.
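
The steps above mostly amount to sending an OpenAI-format request to a different URL. The sketch below uses only the Python standard library to build (not send) a chat completion request for OpenRouter's `https://openrouter.ai/api/v1/chat/completions` endpoint. The `models` fallback list is an assumption based on OpenRouter's model-routing documentation and should be checked against the current API reference; `build_chat_request` and `extract_reply` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Optional

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str,
                       fallbacks: Optional[list[str]] = None) -> urllib.request.Request:
    """Build, but do not send, an OpenAI-format chat completion request."""
    payload = {
        "model": model,  # a model ID from the catalog, e.g. "openai/gpt-4o"
        "messages": [{"role": "user", "content": prompt}],
    }
    if fallbacks:
        # Assumption: OpenRouter tries the `models` list in order when the
        # primary model is unavailable; check the model-routing docs.
        payload["models"] = [model, *fallbacks]
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # key from the dashboard
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-format response body."""
    return response["choices"][0]["message"]["content"]


# Usage (needs a real key and network access to actually send):
# req = build_chat_request("YOUR_API_KEY", "openai/gpt-4o", "Hello!")
# with urllib.request.urlopen(req) as resp:
#     print(extract_reply(json.load(resp)))
```

Keeping request construction separate from sending makes it easy to log payloads during testing and to wrap the network call in retries and timeouts once you move to production.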

OpenRouter AI Industry Use Cases

- Customer support automation that routes common queries to fast, low-cost models while escalating complex tickets to higher-accuracy models.

- Content generation platforms that dynamically switch between creative and deterministic models based on task and budget.

- Research labs benchmarking multiple LLMs through one API to compare reasoning quality and latency.

- Enterprise apps standardizing on a single gateway to improve uptime and enforce consistent data policies across vendors.

OpenRouter AI Pricing

OpenRouter AI has no subscription fees. It uses usage-based pricing with model-specific rates shown in the catalog. You pay per request or per token depending on the model’s pricing. Total cost depends on the models you choose, traffic volume, and your routing strategy.
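
Because billing is per token (or per request) at model-specific rates, estimating spend is simple arithmetic. The sketch below assumes prices quoted in USD per million tokens plus an optional flat per-request fee; the `SMALL` and `LARGE` rates are made up for illustration, so substitute the figures shown in the catalog.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    """Illustrative rates in USD; real rates come from the model catalog."""
    prompt_per_million: float  # USD per 1M input tokens
    completion_per_million: float  # USD per 1M output tokens
    per_request: float = 0.0  # optional flat fee per request


def request_cost(price: ModelPrice, prompt_tokens: int, completion_tokens: int) -> float:
    """USD cost of one request under per-token (plus per-request) billing."""
    token_cost = (prompt_tokens * price.prompt_per_million
                  + completion_tokens * price.completion_per_million) / 1_000_000
    return token_cost + price.per_request


def monthly_cost(price: ModelPrice, prompt_tokens: int, completion_tokens: int,
                 requests_per_month: int) -> float:
    """Scale a typical request's cost to a monthly traffic volume."""
    return request_cost(price, prompt_tokens, completion_tokens) * requests_per_month


# Made-up rates for a small and a large model.
SMALL = ModelPrice(prompt_per_million=0.15, completion_per_million=0.60)
LARGE = ModelPrice(prompt_per_million=2.50, completion_per_million=10.00)
```

With these made-up rates, a request with 1,000 prompt tokens and 500 completion tokens costs $0.00045 on `SMALL` and $0.0075 on `LARGE`, so 100,000 such requests a month cost about $45 versus $750. Comparing `monthly_cost` across models, or across a weighted mix of them, shows what a routing strategy is worth.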

OpenRouter AI Pros and Cons

Pros:

- Unified, OpenAI-compatible API simplifies multi-model integration.

- Routing curves and visualization enable data-driven optimization.

- Distributed infrastructure improves availability and resilience.

- Transparent model pricing supports cost control.

- Custom data policies help align with privacy and compliance needs.

- No subscription; pay only for what you use.

Cons:

- Gateway dependency introduces an additional network hop and potential variability in latency.

- Feature support may vary by model; not all providers offer identical capabilities.

- Requires initial setup and tuning of routing strategies to realize full benefits.

- Organizations with strict vendor requirements may prefer direct provider contracts.

OpenRouter AI FAQs

Q1: Is OpenRouter compatible with the OpenAI SDK?

Yes. It uses an OpenAI-compatible API, so most OpenAI clients work after changing only the base URL and API key.

Q2: How does routing improve performance and cost?

Routing curves let you split traffic across models, sending simple prompts to cheaper models and complex ones to higher-quality models, optimizing both latency and spend.
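
One simple way to decide which prompts count as "simple" is a heuristic on length and keywords. The sketch below is a hypothetical client-side router, not an OpenRouter feature: the model IDs, the keyword set, and the 50-word threshold are illustrative assumptions to tune against your own traffic.

```python
# Hypothetical tiers; substitute model IDs from the OpenRouter catalog.
CHEAP_MODEL = "meta-llama/llama-3.1-8b-instruct"
STRONG_MODEL = "openai/gpt-4o"

# Illustrative words that suggest a prompt needs the stronger model.
ESCALATION_KEYWORDS = {"refund", "legal", "contract", "debug", "prove"}


def route_prompt(prompt: str, max_simple_words: int = 50) -> str:
    """Choose a model ID with a crude length-and-keyword heuristic."""
    # Lowercase and strip surrounding punctuation so "Refund?" matches "refund".
    words = [word.strip(".,;:!?\"'()").lower() for word in prompt.split()]
    if len(words) > max_simple_words:
        return STRONG_MODEL  # treat long prompts as complex
    if any(word in ESCALATION_KEYWORDS for word in words):
        return STRONG_MODEL  # escalate sensitive or technical topics
    return CHEAP_MODEL
```

Matching whole words rather than substrings avoids false escalations such as "improve" matching "prove". In practice you would validate the thresholds by comparing answer quality and cost on a sample of real prompts.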

Q3: Does OpenRouter store my data?

Data handling is configurable with custom policies. Set retention and sharing preferences to meet your organization’s requirements.
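
At the request level, OpenRouter documents a `provider` object for provider-routing preferences. The sketch below assumes its `data_collection` field accepts `"allow"` or `"deny"`, with `"deny"` restricting routing to providers that do not store or train on prompts; verify this against the current provider-routing reference before relying on it. `with_data_policy` is a hypothetical helper.

```python
import copy


def with_data_policy(payload: dict, allow_data_collection: bool) -> dict:
    """Return a copy of an OpenAI-format payload with a provider data policy.

    Assumption: OpenRouter reads `provider.data_collection` ("allow" or
    "deny") to filter providers by their data-retention practices.
    """
    updated = copy.deepcopy(payload)  # leave the caller's dict untouched
    provider = updated.setdefault("provider", {})
    provider["data_collection"] = "allow" if allow_data_collection else "deny"
    return updated


base = {"model": "openai/gpt-4o", "messages": [{"role": "user", "content": "Hi"}]}
strict = with_data_policy(base, allow_data_collection=False)
```

Applying the policy in one helper keeps it consistent across every request your application sends; account-wide preferences set in the dashboard may also apply.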

Q4: What does pricing look like?

Pricing is usage-based and model-specific. You are billed per request or per token according to each model’s listed rates.

Q5: Can I use OpenRouter in production?

Yes. Its distributed infrastructure is designed for higher availability, and observability features help monitor reliability and optimize routes.
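
Running in production usually means retrying transient failures such as rate limits or temporary provider outages. The sketch below is a generic retry helper with exponential backoff and full jitter; it is not tied to any OpenRouter client, and `TransientError` is a hypothetical exception your HTTP layer would raise for retryable responses (for example HTTP 429 or 5xx).

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error for retryable failures (e.g. HTTP 429 or 5xx)."""


def with_retries(call: Callable[[], T], max_attempts: int = 4,
                 base_delay: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `call`, retrying TransientError with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Full jitter: wait a random time up to base_delay * 2^(attempt - 1).
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))
    raise RuntimeError("unreachable")
```

Injecting `sleep` keeps the helper testable; in real code leave it as `time.sleep`. Non-retryable errors, such as an invalid API key, propagate immediately because only `TransientError` is caught.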

Q6: Which models can I access?

OpenRouter aggregates a range of major LLMs. Consult the model catalog to see current availability and capabilities.