
Middleware


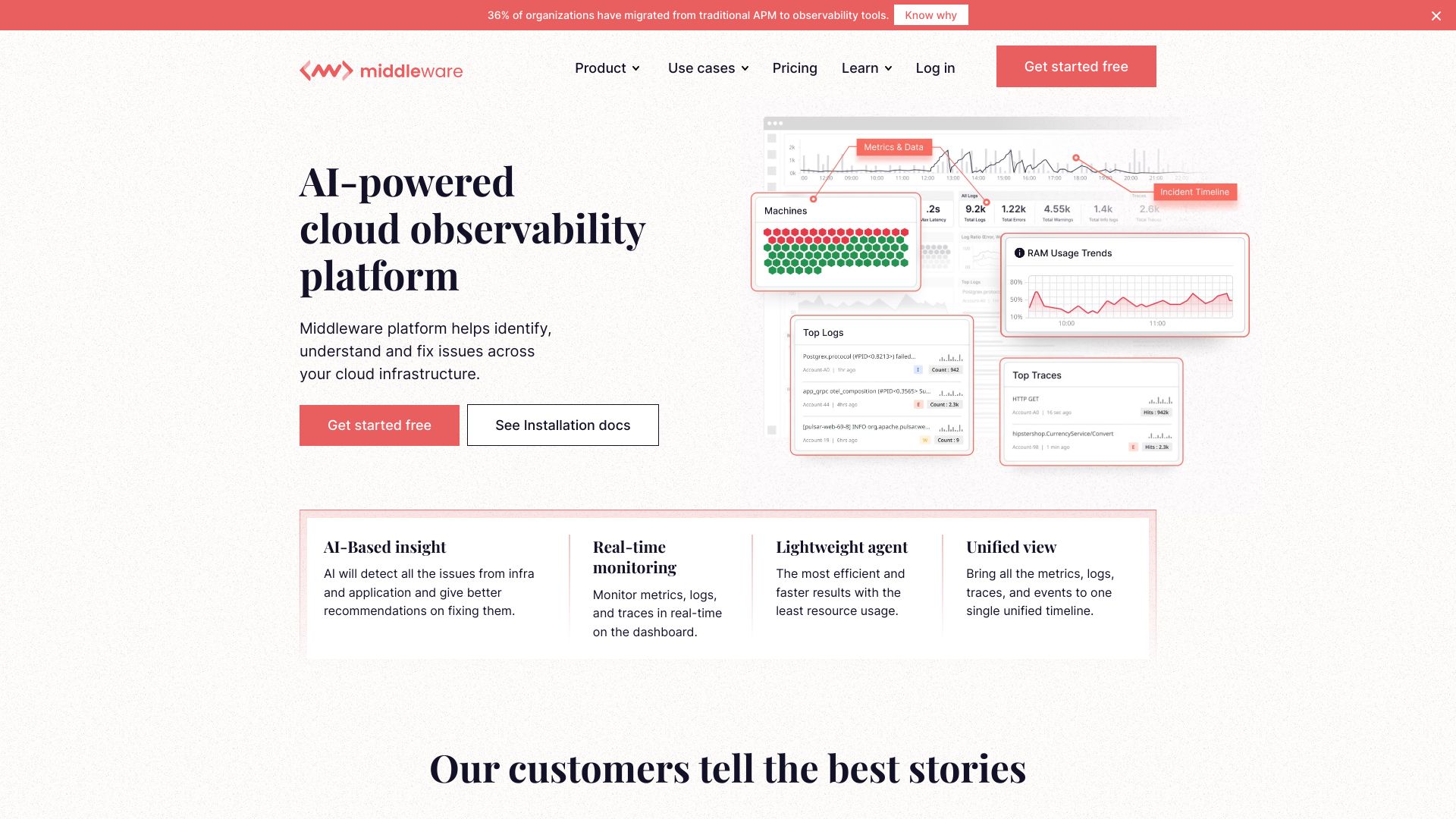

Tool Introduction: Middleware AI provides observability for infrastructure, logs, and APM, with real-time AI alerts.


Inclusion Date: Nov 09, 2025


Tool Information

What is Middleware AI

Middleware AI is a full-stack cloud observability platform that unifies infrastructure monitoring, log management, application performance monitoring (APM), and distributed tracing to give teams end-to-end visibility across modern systems. It applies AI-driven anomaly detection and correlation to surface issues quickly, reduce mean time to resolution (MTTR), and guide root-cause analysis. With real-time data access, live querying, and customizable dashboards, teams can monitor clouds, containers, and microservices in one place. Security controls, role-based access, and governance features help protect data while keeping operations efficient and reliable.

Main Features of Middleware AI

- Unified observability: Centralizes metrics, logs, traces, APM, and infrastructure health in a single platform.

- AI-powered insights: Anomaly detection, noise reduction, and correlation to speed up troubleshooting and incident response.

- Real-time analytics: Live tail for logs, streaming metrics, and instant dashboards for rapid feedback.

- Infrastructure monitoring: Visibility across cloud services, VMs, containers, and Kubernetes clusters.

- Distributed tracing: Track requests across microservices to analyze latency, errors, and dependencies.

- OpenTelemetry-friendly: Flexible data ingestion and easy instrumentation for apps and services.

- Alerting and SLOs: Smart alerts, thresholds, and service-level objectives that integrate with on-call workflows.

- Dashboards and queries: Custom charts, drill-downs, and powerful search to explore logs and performance data.

- Cost controls: Data retention policies, sampling, and filtering to manage observability spend.

- Security and governance: Role-based access, auditability, and secure data handling for compliance-focused teams.
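To make the anomaly detection feature above more concrete, here is a minimal sketch of one common generic technique: a rolling z-score over a metric series. It is not Middleware AI's actual detection logic, which the listing does not describe. The function name `detect_anomalies`, the `window` and `threshold` parameters, and the sample latencies are all hypothetical.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Return indices of points that deviate strongly from the trailing window.

    Hypothetical helper for illustration only; not Middleware AI's algorithm.
    """
    history = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(values):
        # Only score a point once a full window of earlier points exists.
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # Skip the check when the window is flat to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(value)
    return anomalies


# Made-up request latencies in milliseconds with one obvious spike.
latencies_ms = [100, 102, 98, 101, 99, 100, 450, 101, 99]
print(detect_anomalies(latencies_ms))  # prints [6]
```

In this simple version the spike itself enters the trailing window and inflates the standard deviation for the next few points, which is one reason production systems tend to use more robust statistics and alert tuning.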

Who Can Use Middleware AI

Middleware AI is designed for DevOps, SRE, platform engineering, and cloud operations teams that need unified visibility across services. It also helps application developers troubleshoot performance, security and compliance teams monitor sensitive environments, and product managers track release health and reliability. Common use cases include incident response, capacity planning, cost optimization, and continuous delivery validation in cloud-native and hybrid environments.

How to Use Middleware AI

- Connect environments: Link your cloud accounts, clusters, and services to start collecting telemetry data.

- Install agents or SDKs: Deploy lightweight agents and instrument applications using OpenTelemetry where needed.

- Ingest data: Stream metrics, logs, and traces from infrastructure, applications, and third-party services.

- Configure dashboards: Build role-specific views for SREs, developers, and product owners.

- Set alerts and SLOs: Define thresholds, anomaly rules, and service objectives aligned to business impact.

- Investigate issues: Use AI-assisted correlation, log search, and tracing to pinpoint root cause and reduce MTTR.

- Optimize costs: Adjust retention, sampling, and filters based on usage patterns and priorities.

- Harden security: Apply RBAC, control data access, and audit activity for governance.
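The "Set alerts and SLOs" step above comes down to simple arithmetic, sketched here under stated assumptions. The sketch shows how an availability target translates into an error budget and a burn rate. The function names, the 99.9% target, the request counts, and the paging threshold are hypothetical, and none of this reflects how Middleware AI evaluates SLOs internally.

```python
def error_budget(slo_target, total_requests):
    """Number of failed requests the SLO tolerates over the window."""
    return (1.0 - slo_target) * total_requests


def burn_rate(slo_target, failed, total):
    """Observed error rate divided by the error rate the SLO allows.

    A value of 1.0 means the budget is being spent exactly on schedule.
    """
    allowed = 1.0 - slo_target
    observed = failed / total
    return observed / allowed


def should_page(slo_target, failed, total, threshold=10.0):
    """Hypothetical paging rule: alert when the budget burns too fast."""
    return burn_rate(slo_target, failed, total) >= threshold


# A 99.9% target over 1,000,000 requests tolerates about 1,000 failures.
print(round(error_budget(0.999, 1_000_000)))
# 50 failures in 10,000 requests is roughly five times the allowed rate.
print(round(burn_rate(0.999, 50, 10_000), 2))
print(should_page(0.999, 50, 10_000))
```

Alerting on burn rate rather than on raw error counts ties the alert to business impact, which is the alignment the step above asks for.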

Middleware AI Use Cases

- Production incident response: Detect anomalies, correlate logs and traces, and resolve outages faster.

- Performance optimization: Identify latency hotspots, slow queries, or service bottlenecks across microservices.

- Kubernetes monitoring: Track cluster health, node/resource utilization, and workload reliability.

- Release validation: Monitor new deployments, compare versions, and guard SLOs with real-time alerts.

- Security observability: Centralize audit logs, enforce access controls, and monitor sensitive workloads.

- Cost and capacity planning: Right-size infrastructure using usage trends and performance data.

Middleware AI Pricing

Pricing commonly reflects factors such as data volume, retention period, enabled features (for example logs, APM, or tracing), and support level. Plans may scale from team to enterprise needs with options to tailor ingestion and retention to budget. For current details, consult the official pricing information and select a plan that aligns with your telemetry volume and compliance requirements.
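Because data volume and retention drive cost, it can help to estimate the effect of sampling before choosing a plan. The sketch below illustrates a generic hash-based sampling decision and a rough storage estimate. It assumes nothing about Middleware AI's pricing or its sampler. The function names `keep_trace` and `stored_gb` and every number shown are hypothetical.

```python
import hashlib


def keep_trace(trace_id: str, ratio: float) -> bool:
    """Deterministically keep roughly `ratio` of traces, based on a hash of the ID.

    The same trace ID always gets the same decision, so all spans of one
    trace are kept or dropped together.
    """
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    # Compare the first 8 bytes, read as an integer, against a scaled cutoff.
    bucket = int.from_bytes(digest[:8], "big")
    return bucket < int(ratio * 2**64)


def stored_gb(daily_ingest_gb: float, ratio: float, retention_days: int) -> float:
    """Approximate steady-state storage: sampled daily volume times retention."""
    return daily_ingest_gb * ratio * retention_days


# Hypothetical workload: 50 GB/day of traces, 10% sampling, 30-day retention.
kept = sum(keep_trace(f"trace-{i}", 0.1) for i in range(10_000))
print(f"kept {kept} of 10000 traces")
print(f"estimated storage: {stored_gb(50, 0.1, 30):.0f} GB")
```

Multiplying the resulting volume by a plan's per-GB rate, taken from the official pricing information, gives a first-order cost estimate.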

Pros and Cons of Middleware AI

Pros:

- Single platform for metrics, logs, traces, and APM reduces tool fragmentation.

- AI-driven anomaly detection accelerates triage and root-cause analysis.

- Real-time data access and live log tailing improve feedback loops.

- OpenTelemetry compatibility simplifies instrumentation.

- Granular access controls and governance support secure operations.

- Cost controls and retention policies help manage observability spend.

Cons:

- Learning curve for teams new to observability and tracing concepts.

- High data volumes can increase costs without careful sampling and filtering.

- Alert tuning is required to avoid noise and alert fatigue.

- Instrumentation effort may be needed for legacy or custom stacks.

- Centralizing telemetry may require review of data privacy and compliance policies.

FAQs about Middleware AI

- Does Middleware AI support OpenTelemetry?

  Yes, it is compatible with OpenTelemetry for flexible ingestion and instrumentation.

- Can it monitor Kubernetes and containerized workloads?

  Yes. It provides visibility into clusters, nodes, pods, and services with metrics, logs, and traces.

- How does the AI detection work?

  The platform applies anomaly detection and correlation across metrics, logs, and traces to highlight probable root causes.

- Is real-time log streaming available?

  Yes. You can live tail logs and query streaming data to accelerate investigations.

- What security controls are included?

  Role-based access control, data governance features, and auditability help protect sensitive telemetry and meet policy requirements.

- Can Middleware AI replace separate APM and logging tools?

  It consolidates APM, logging, tracing, and infrastructure monitoring, helping reduce tool sprawl while preserving deep diagnostics.