Coder

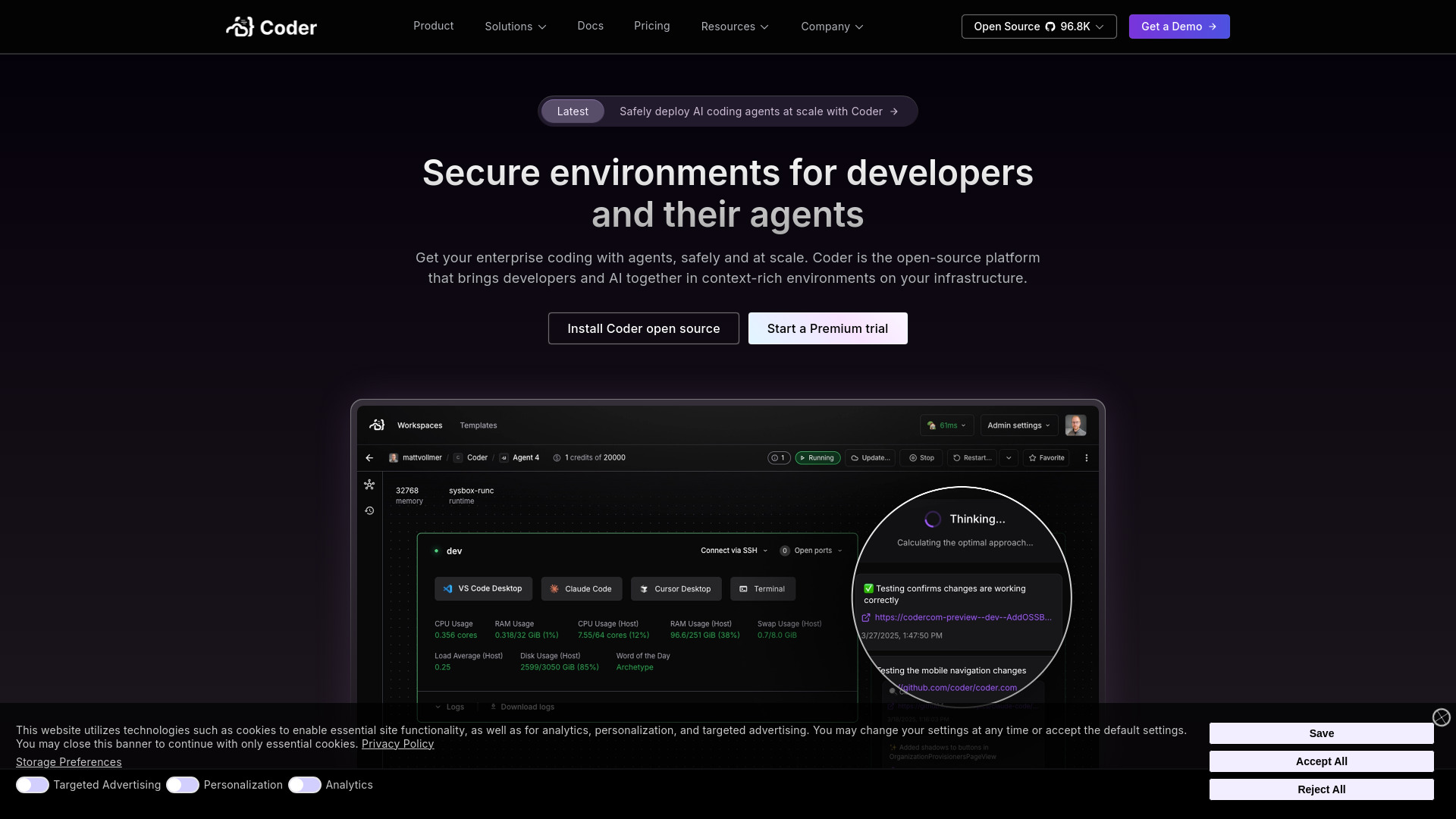

Tool Introduction: Self-hosted cloud development environments with AI agents, defined as code and run securely on your own infrastructure.

Inclusion Date: Oct 28, 2025

Tool Information

What is Coder AI

Coder AI is a self-hosted cloud development environment platform that lets teams run developer workspaces and AI coding agents side by side on their own infrastructure. Because environments are provisioned as code, workspaces are reproducible, secure, and preloaded with the tools and configuration developers need from day one. Coder centralizes compute, secrets, and policy while giving engineers fast, IDE-ready access from any device. With strong isolation, auditing, and network controls, organizations can safely scale AI-assisted development across Kubernetes or VMs, including GPU-accelerated workloads.

Coder AI Main Features

- Self-hosted on your infrastructure: Keep source code, build artifacts, and AI activity within your network for data control and compliance.

- Workspaces as code: Define reproducible environments with templates, images, and startup scripts for consistent onboarding and fewer “works on my machine” issues.

- Isolation and security: Per-workspace sandboxing, RBAC, secret management, and network egress controls to deploy agents safely at scale.

- AI agent readiness: Run AI coding agents within scoped workspaces, apply policy guardrails, and monitor actions for accountability.

- IDE and protocol support: Connect from VS Code, JetBrains Gateway, or plain SSH for a native developer experience.

- Ephemeral or persistent workspaces: Spin up short-lived sandboxes for experiments or maintain persistent environments for long-running projects.

- GPU and high-performance compute: Schedule GPU-enabled workspaces for AI-assisted coding, testing, or machine learning workloads.

- Auditability and compliance: Centralized logs and workspace metadata help meet internal audit and regulatory requirements.

- Cost and performance controls: Autoscaling, quotas, and standardized images reduce idle spend and speed up startup times.

Who Should Use Coder AI

Coder AI suits platform engineering and DevOps teams standardizing secure development environments, software engineers who need fast, consistent workspaces, and security- and compliance-led organizations that must keep developer compute and AI activity on-premises or in private clouds. It's also a strong fit for data science and ML teams that need GPU access, and for regulated industries seeking policy-driven isolation for AI coding agents.

How to Use Coder AI

- Deploy the Coder control plane on Kubernetes or VMs within your cloud or data center.

- Connect compute pools and registries, and configure authentication and role-based access.

- Create workspace templates as code (Coder templates are written in Terraform) using container images or VM snapshots, and define startup scripts.

- Set organization policies for resource limits, network egress, secrets, and agent permissions.

- Developers select a template to provision a workspace and connect with their preferred IDE or SSH.

- Optionally enable AI coding agents inside isolated workspaces with scoped credentials and monitoring.

- Track usage, iterate on templates, and tune autoscaling and GPU allocation as needs evolve.
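The final step, tracking usage, can also be scripted. The minimal sketch below assumes that your Coder deployment exposes `GET /api/v2/workspaces`, authenticated with a `Coder-Session-Token` header, as described in Coder's API reference. The base URL, the token, the `owner:me` search filter, and the `"workspaces"` response field are assumptions to verify against your own deployment and version. It uses only the Python standard library.

```python
"""Minimal sketch: list workspaces through Coder's REST API for usage tracking.

Assumptions (verify against your Coder version's API reference):
- the deployment exposes GET /api/v2/workspaces
- authentication uses the Coder-Session-Token header
- the optional q parameter accepts a search filter such as "owner:me"
- the JSON response wraps results in a "workspaces" field
"""
import json
import urllib.parse
import urllib.request


def build_workspaces_request(base_url: str, token: str, query: str = "") -> urllib.request.Request:
    """Build (but do not send) a GET request that lists workspaces."""
    url = base_url.rstrip("/") + "/api/v2/workspaces"
    if query:
        # URL-encode the search filter so characters like ':' are safe.
        url += "?" + urllib.parse.urlencode({"q": query})
    return urllib.request.Request(
        url,
        headers={"Accept": "application/json", "Coder-Session-Token": token},
        method="GET",
    )


def list_workspaces(base_url: str, token: str, query: str = "") -> list:
    """Send the request and return the decoded list of workspaces."""
    req = build_workspaces_request(base_url, token, query)
    with urllib.request.urlopen(req, timeout=10) as resp:
        body = json.load(resp)
    # Assumed response shape: {"workspaces": [...], "count": N}
    return body.get("workspaces", [])
```

For example, `list_workspaces("https://coder.example.com", token, "owner:me")` would return the caller's workspaces; the hostname is a placeholder and `token` stands for a session or API token you have issued. Building the request separately from sending it keeps the URL and header logic easy to check without a live server.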

Coder AI Industry Use Cases

In financial services, teams run tightly isolated workspaces with restricted egress so AI agents can assist without exposing sensitive code or data. Healthcare and life sciences organizations use GPU-enabled environments to accelerate analysis while keeping protected health information (PHI) off public SaaS. Software vendors standardize templates across monorepos, enabling rapid onboarding and safe agent-assisted refactoring. In education and training, ephemeral environments give learners clean, reproducible setups on demand.

Coder AI Pricing

Coder AI is typically deployed as a self-hosted platform. The core is available as open source, with commercial enterprise licensing for advanced capabilities and vendor support. Many organizations start with a proof of concept and engage the vendor for trials and pricing details based on seats and scale.

Coder AI Pros and Cons

Pros:

- Strong isolation and policy controls for safe AI agent deployment.

- Reproducible, provisioned-as-code workspaces reduce setup time and drift.

- Keeps code and data on your infrastructure for compliance and governance.

- GPU-ready and scalable across Kubernetes or VM estates.

- Improves developer velocity with fast, consistent environments.

Cons:

- Requires platform engineering effort to deploy, operate, and maintain.

- Self-hosting introduces infrastructure cost and capacity planning.

- Learning curve for templates, policies, and image management.

- Remote IDE performance depends on network latency and bandwidth.

Coder AI FAQs

Q1: How is Coder AI different from managed cloud IDE services?

It's self-hosted, giving you control over data residency, network policy, and security settings while offering a similarly fast, IDE-ready developer experience.

Q2: Can I safely run AI coding agents in production repos?

Yes. Running agents inside isolated workspaces, scoping their credentials, and enforcing egress and action policies lets you contain what agents can access and audit what they do.

Q3: Which IDEs are supported?

Developers commonly connect via VS Code, JetBrains Gateway, or SSH-based workflows, enabling a familiar local-like experience.

Q4: Does Coder AI support GPUs?

Coder can schedule GPU-backed workspaces so developers and agents can leverage accelerated compute for AI-assisted tasks.

Q5: Can it run in air-gapped or private cloud environments?

Yes. As a self-hosted platform, it’s designed to operate on private networks where internet access is tightly controlled or restricted.