
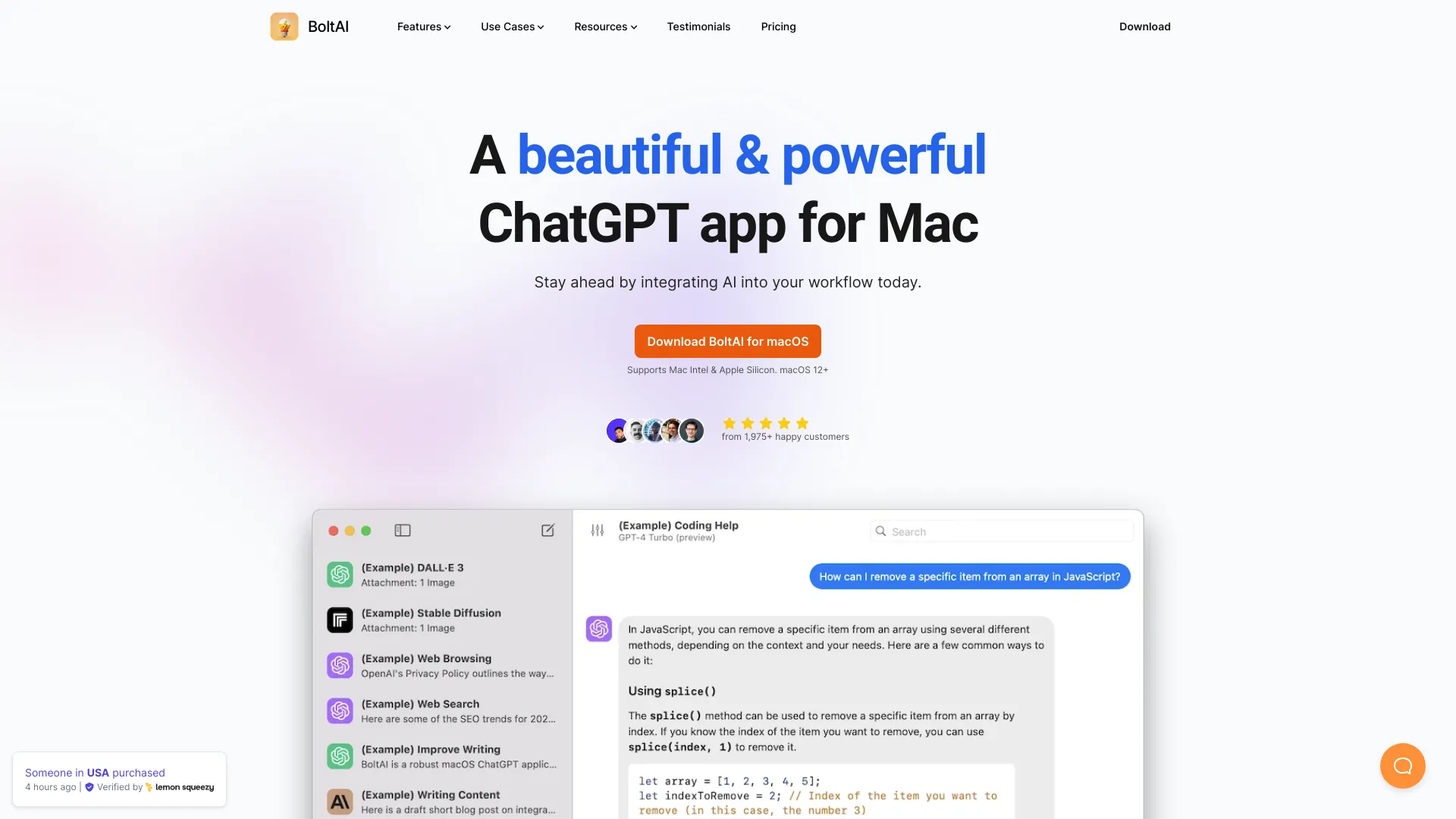

BoltAI


Tool Introduction: Mac-native AI app with OpenAI, Anthropic, and Ollama support and fast inline tools.


Inclusion Date: Oct 31, 2025



Tool Information

What is BoltAI

BoltAI is a native, high‑performance AI app for Mac that brings modern language models into everyday work. It connects with OpenAI, Anthropic, Mistral, and local engines like Ollama, so you can chat, generate, review, and automate without leaving your desktop. With an intuitive chat UI, powerful AI commands, and AI Inline for instant in‑app assistance, BoltAI streamlines writing, coding, research, and planning. A built‑in license manager and flexible model choices help teams and solo users boost productivity with minimal setup.

Main Features of BoltAI

- Native macOS performance: Optimized, responsive experience designed specifically for Mac, reducing latency and friction in daily workflows.

- Multi‑model support: Connect to OpenAI, Anthropic, and Mistral, or run local models via Ollama to balance speed, cost, and privacy.

- Intuitive chat UI: Conversational interface for drafting, rewriting, explaining, and brainstorming with context‑aware threads.

- AI commands: Create and reuse prompt templates and quick commands to standardize tasks like summarize, translate, or refactor.

- AI Inline: Access assistance inside your current app to rewrite text, generate replies, or insert code without breaking flow.

- License manager: Manage activations and licenses across devices and teams for easier administration.

- Privacy and control: Choose cloud providers or on‑device inference to keep sensitive content local when needed.

- Flexible workflows: Switch models per chat, tailor system prompts, and refine outputs for writing, development, and planning.

Who Can Use BoltAI

BoltAI suits professionals who want fast, reliable AI on macOS. Writers and marketers can draft, edit, and repurpose content. Developers can generate code, explain snippets, and review pull requests. Product managers and entrepreneurs can outline specs, summarize research, and plan roadmaps. Customer support and operations teams can craft responses and SOPs. Students and researchers can condense articles and create study notes, all within a focused desktop workflow.

How to Use BoltAI

- Download and install BoltAI on your Mac, then open the app.

- Connect your preferred AI providers (OpenAI, Anthropic, Mistral) or configure local models through Ollama.

- Create a new chat, choose a model, and optionally set a system prompt or select a prompt template.

- Use the chat UI to ask questions or run AI commands; invoke AI Inline to work directly inside other apps.

- Organize threads, save effective prompts as commands, and manage activations in the license manager.

BoltAI Use Cases

Teams use BoltAI to draft blog posts, emails, and landing copy; summarize reports and meeting notes; translate or localize customer communications; generate and refactor code; explain complex functions; produce SQL queries; and outline product specs and roadmaps. With Ollama, sensitive content can be processed on‑device for privacy‑critical workflows in legal, finance, healthcare research, and enterprise operations.

Pros and Cons of BoltAI

Pros:

- Fast, native macOS experience with low friction.

- Supports OpenAI, Anthropic, Mistral, and local models via Ollama.

- AI Inline enables in‑context assistance across apps.

- Reusable AI commands standardize common tasks.

- License manager simplifies device and team administration.

- Option for on‑device processing to improve privacy.

Cons:

- macOS‑only; not available for Windows or Linux.

- Initial setup may require configuring provider keys and local models.

- Cloud model usage costs depend on external providers.

- Running local models can be resource‑intensive on older hardware.

- Learning curve to design effective prompts and command templates.

FAQs about BoltAI


Which AI models does BoltAI support?

It supports OpenAI, Anthropic, and Mistral, plus local models through Ollama, allowing flexible performance, cost, and privacy choices.


Does BoltAI work offline?

Partly. When you use local models via Ollama, processing runs on‑device. Cloud providers require an internet connection.


What is AI Inline?

AI Inline lets you call the assistant inside your current app to rewrite, translate, or generate text without switching windows.


Can I create custom commands?

Yes. You can build reusable AI commands and templates to speed up repetitive tasks like summarize, expand, or refactor.


Is BoltAI suitable for teams?

Yes. The license manager helps manage activations, and multi‑model support fits varied team needs and compliance preferences.


How does BoltAI handle privacy?

You choose the model provider. Local models keep data on device, while cloud requests are processed by the selected provider under their policies.